ComfyDeploy: How does FM_nodes work in ComfyUI?

What is FM_nodes?

A collection of ComfyUI nodes, including WFEN, RealViFormer, and ProPIH.

How to install it in ComfyDeploy?

Head over to the machine page

- Click on the "Create a new machine" button

- Select the Edit build steps

- Add a new step -> Custom Node

- Search for FM_nodes and select it

- Close the build step dialog and then click on the "Save" button to rebuild the machine

FM_nodes

A collection of ComfyUI nodes.

Click name to jump to workflow

- WFEN Face Restore. Paper: Efficient Face Super-Resolution via Wavelet-based Feature Enhancement Network

- RealViformer. Paper: Investigating Attention for Real-World Video Super-Resolution

- ProPIH. Paper: Progressive Painterly Image Harmonization from Low-level Styles to High-level Styles

- CoLIE. Paper: Fast Context-Based Low-Light Image Enhancement via Neural Implicit Representations

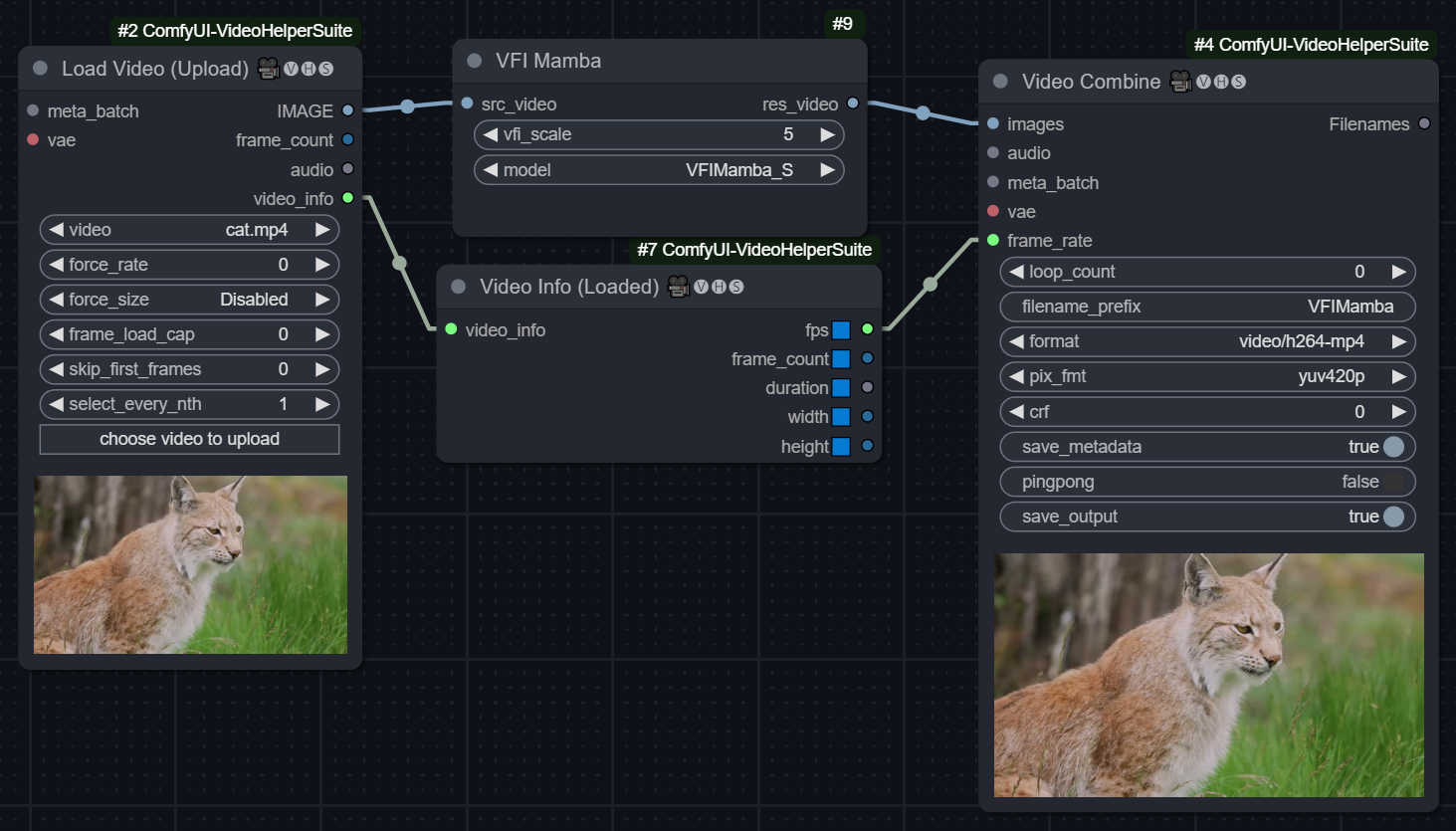

- VFIMamba. Paper: Video Frame Interpolation with State Space Models

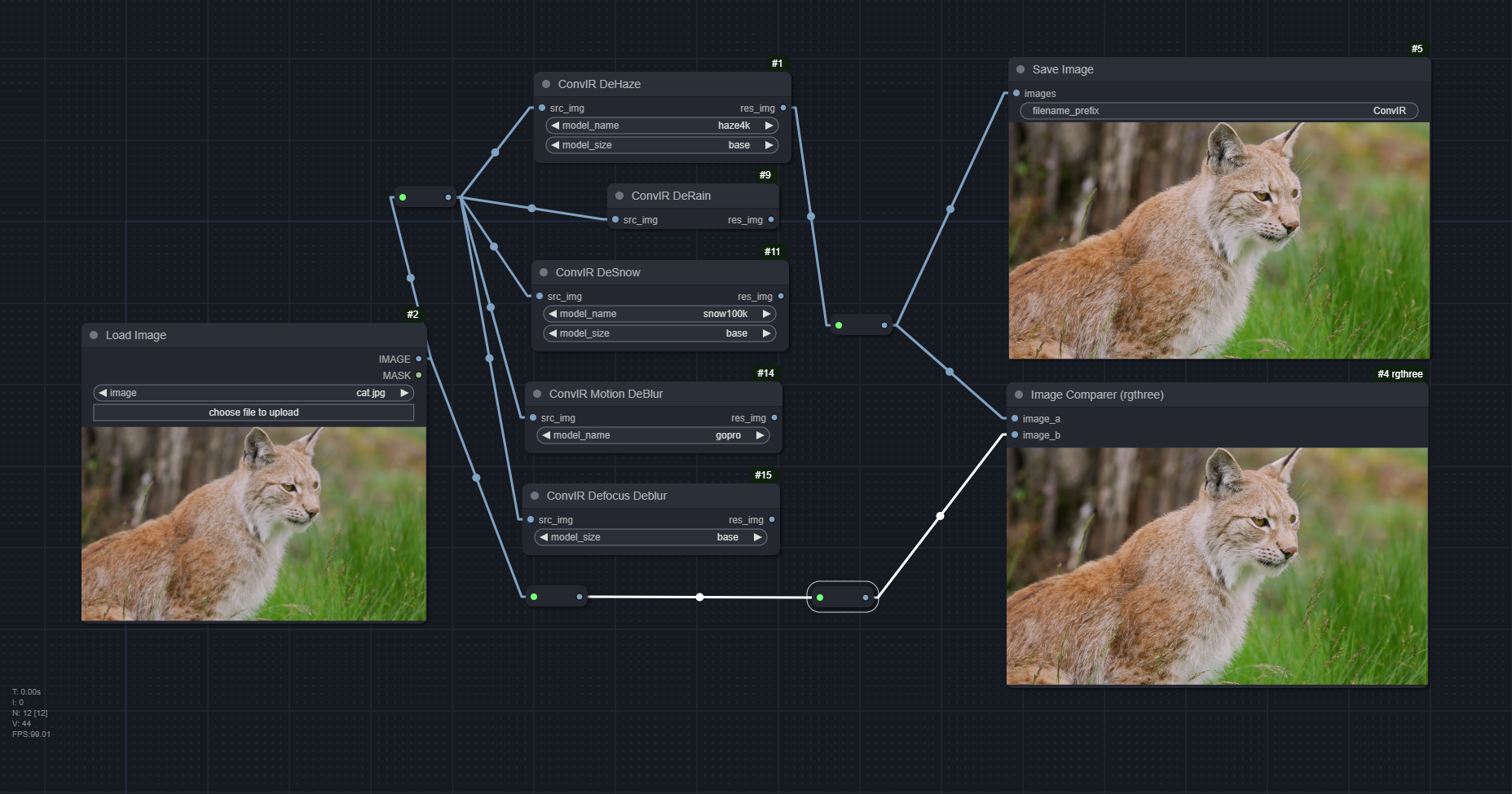

- ConvIR. Paper: Revitalizing Convolutional Network for Image Restoration

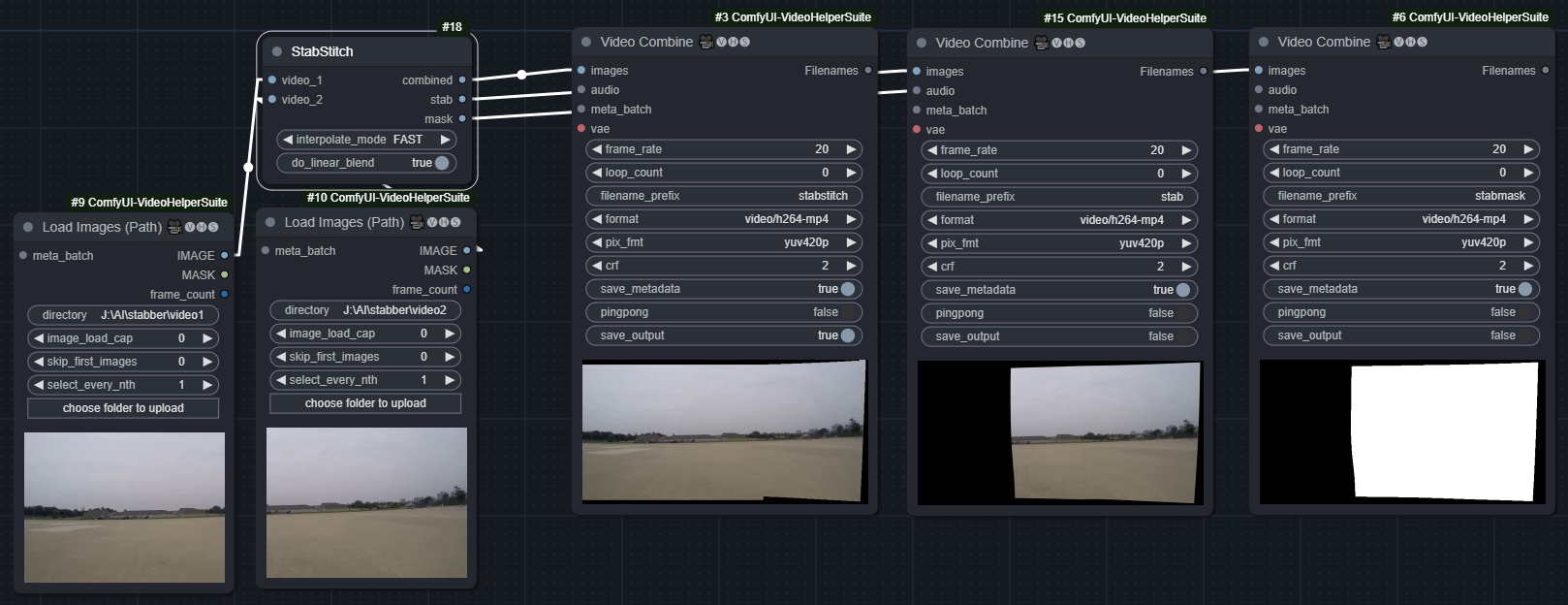

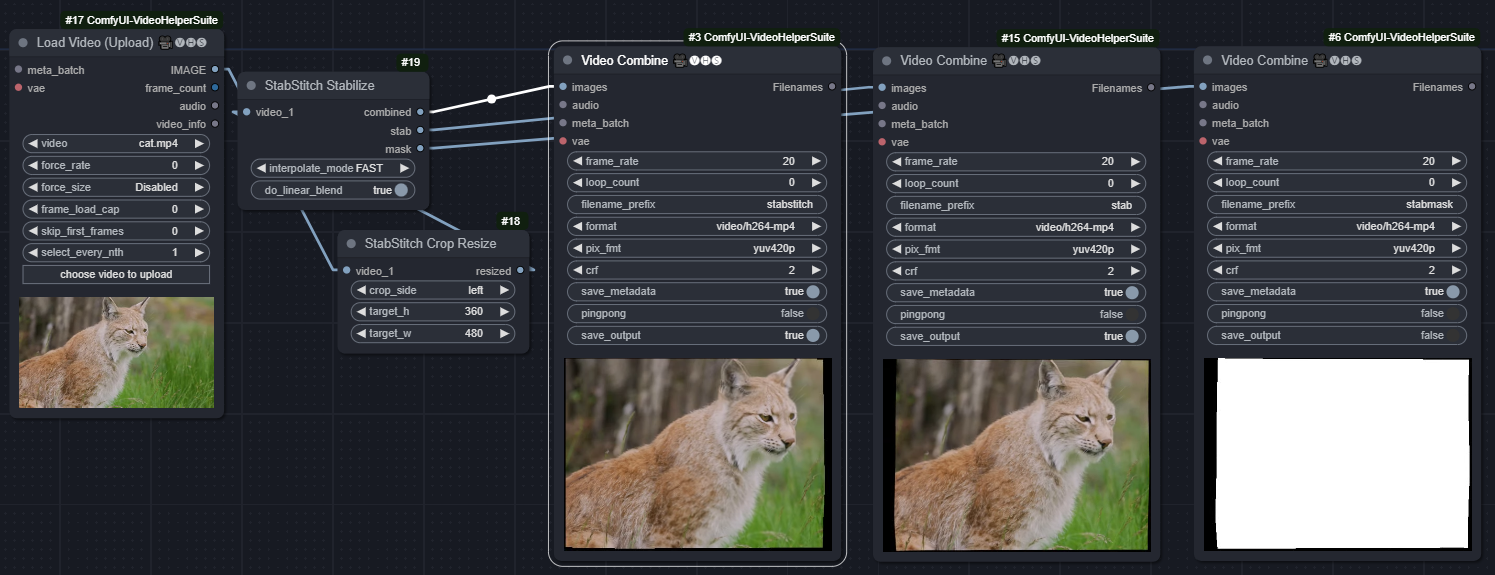

- StabStitch. Paper: Eliminating Warping Shakes for Unsupervised Online Video Stitching

Workflows

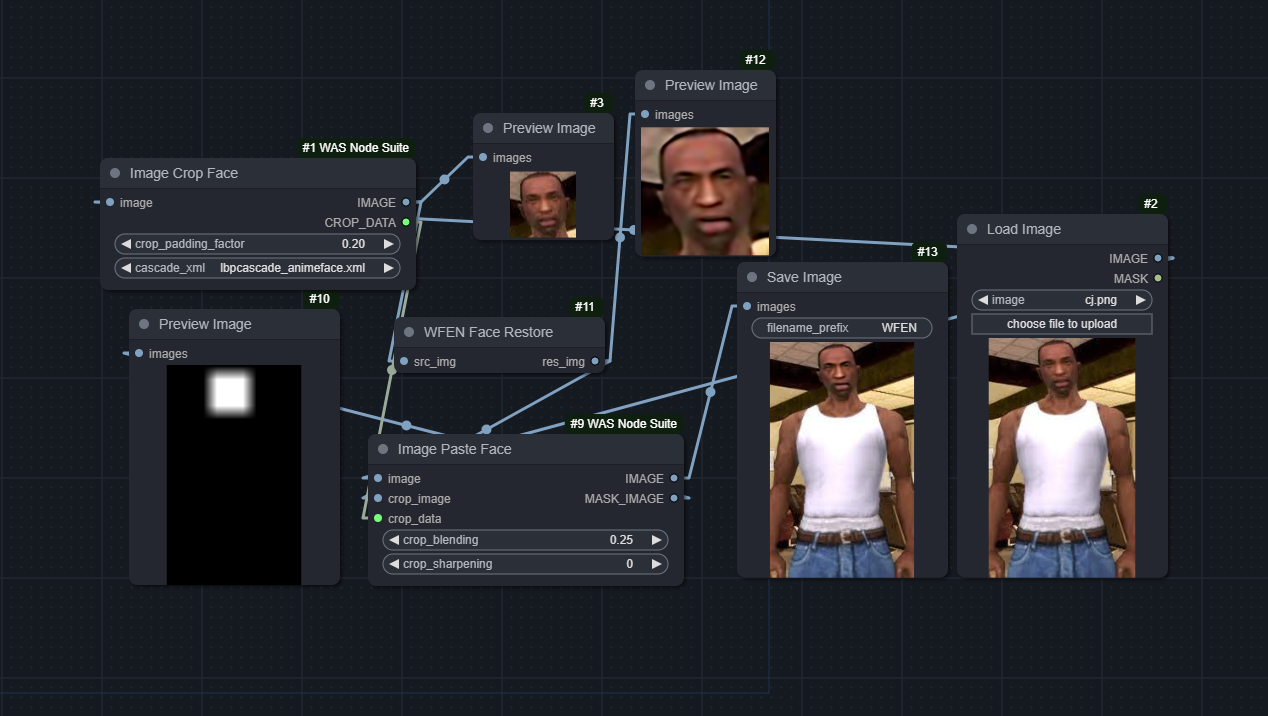

WFEN

Download the model here and place it in models/wfen/WFEN.pth.

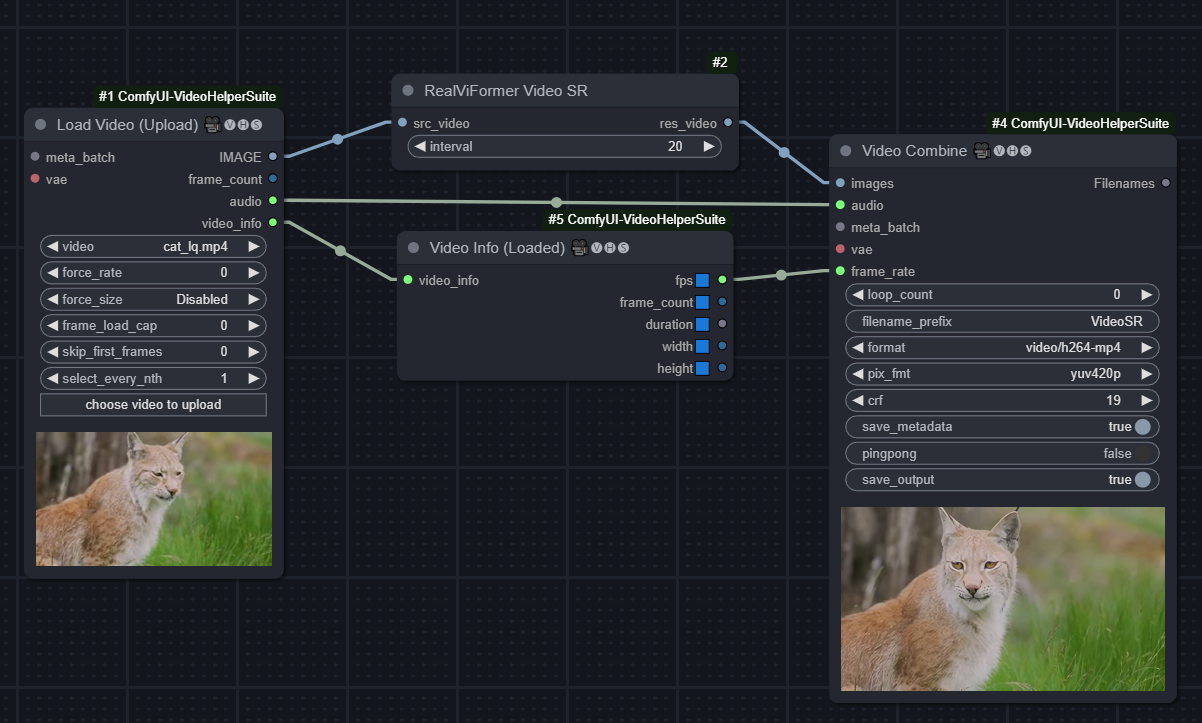

RealViformer

Download the model here and place it in models/realviformer/weights.pth.

(Not a workflow-embedded image)

https://github.com/user-attachments/assets/e89003c0-7be5-4263-b281-fd609807cea1

RealViFormer upscale example

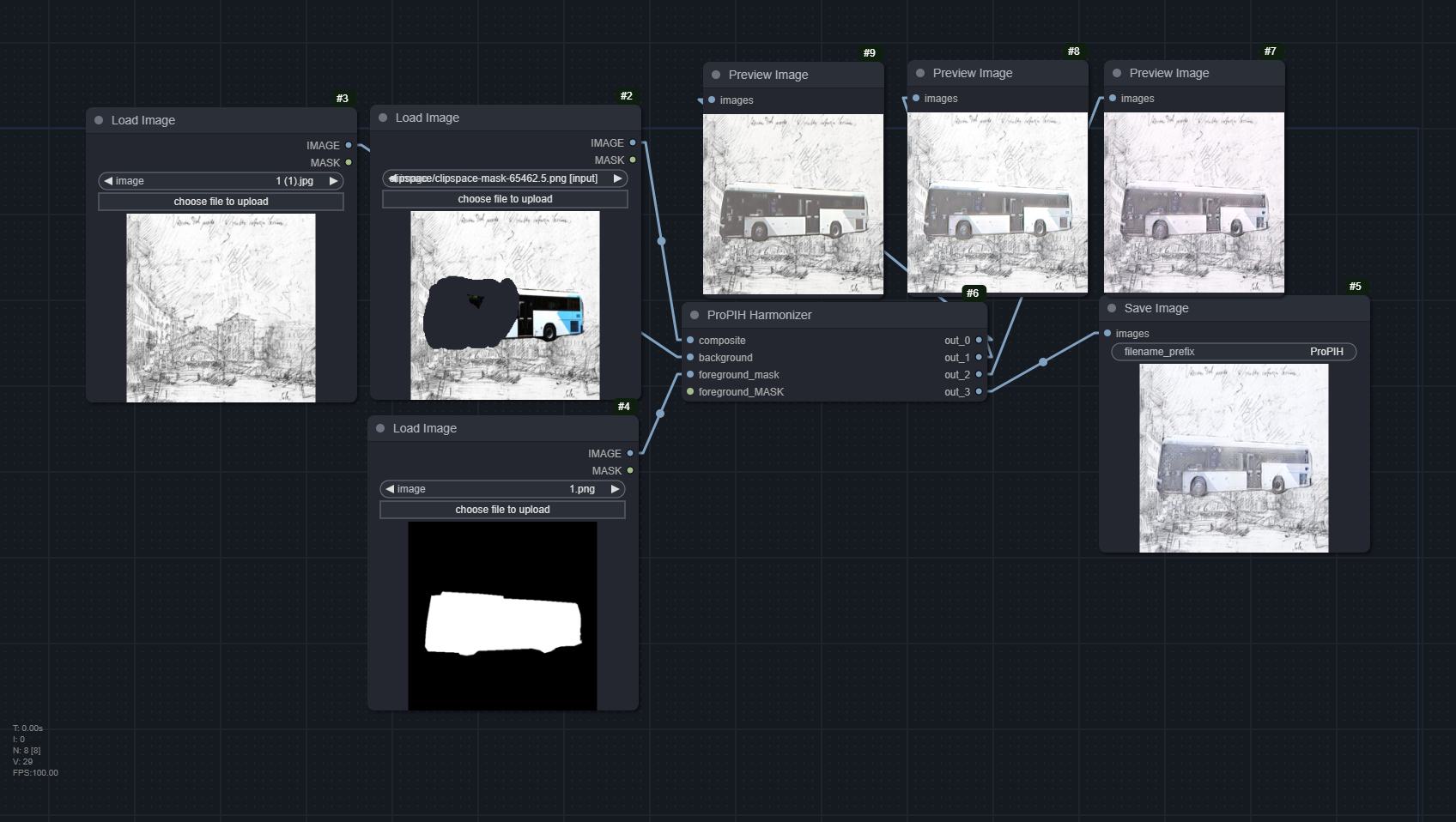

ProPIH

Download the vgg_normalised.pth model from the Installation section and latest_net_G.pth from the Train/Test section, then place them at:

models/propih/vgg_normalised.pth

models/propih/latest_net_G.pth
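A quick way to confirm the downloads landed in the right place is to check the paths listed in the WFEN, RealViformer, and ProPIH sections. The following is a minimal sketch, not part of FM_nodes: `missing_models` is a hypothetical helper, and the root folder (the one that contains `models/`) is an assumption you should adjust to your install.

```python
from pathlib import Path

# Relative paths copied from the WFEN, RealViformer, and ProPIH sections.
EXPECTED_MODELS = [
    "models/wfen/WFEN.pth",
    "models/realviformer/weights.pth",
    "models/propih/vgg_normalised.pth",
    "models/propih/latest_net_G.pth",
]


def missing_models(root, expected=EXPECTED_MODELS):
    """Return the expected model paths that are not files under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    # "." is an assumption: run this from the folder that contains `models/`.
    for rel in missing_models("."):
        print(f"missing: {rel}")
```

An empty result means every listed file was found; it does not verify that the files are the correct weights.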

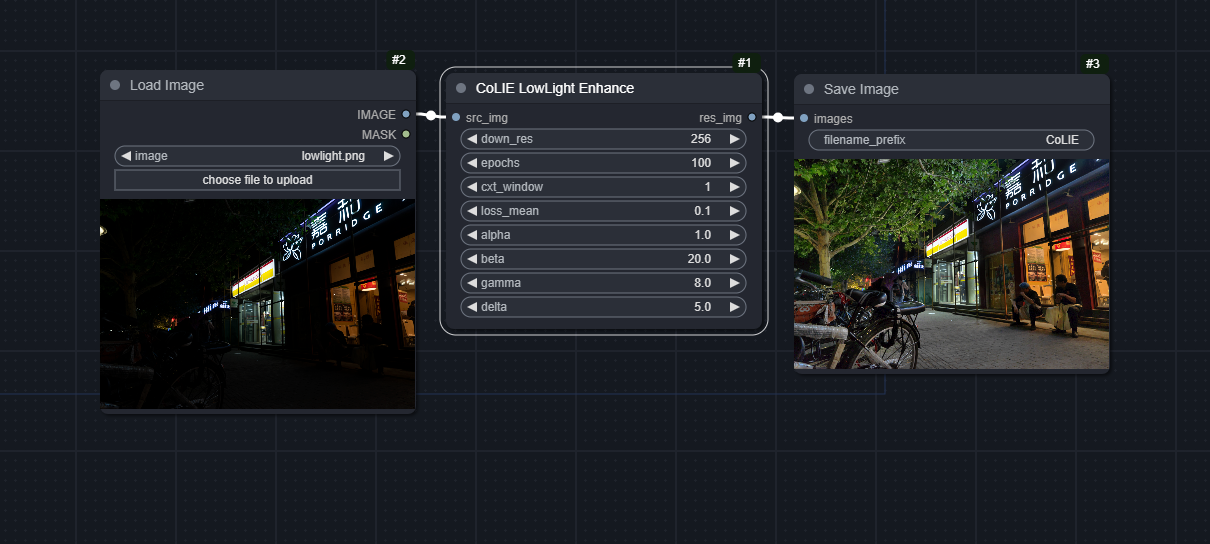

CoLIE

No model needs to be downloaded. Lower loss_mean seems to result in brighter images. The node works with single images and with batched/video input.

VFIMamba

Download the models from the Hugging Face page and place them at:

models/vfimamba/VFIMamba_S.pkl

models/vfimamba/VFIMamba.pkl

You will need to install mamba-ssm, which does not have a prebuilt Windows binary. You will need:

- triton. Prebuilt builds for Python 3.10 and 3.11 can be found here: https://github.com/triton-lang/triton/issues/2881

  - https://huggingface.co/madbuda/triton-windows-builds/tree/main

- causal-conv1d. Follow this post: https://github.com/NVlabs/MambaVision/issues/14#issuecomment-2232581078

- mamba-ssm. Follow this tutorial: https://blog.csdn.net/yyywxk/article/details/140420538. Fork that followed all the steps: https://github.com/FuouM/mamba-windows-build

I've built mamba-ssm for Python 3.11, torch 2.3.0+cu121, which can be obtained here: https://huggingface.co/FuouM/mamba-ssm-windows-builds/tree/main

To install, run pip install [].whl, replacing [] with the name of the downloaded wheel file.
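Because the prebuilt mamba-ssm wheel targets a specific Python and torch build, it can help to compare your environment against those targets before installing. This is a rough sketch under stated assumptions: `matches_prebuilt_wheel` is a hypothetical helper, and requiring an exact torch version match is a conservative assumption (other builds may or may not work).

```python
import sys

# Build targets stated above for the prebuilt wheel.
WHEEL_PYTHON = (3, 11)
WHEEL_TORCH = "2.3.0+cu121"


def matches_prebuilt_wheel(python_version, torch_version):
    """True if the interpreter and torch build equal the wheel's build targets."""
    same_python = tuple(python_version[:2]) == WHEEL_PYTHON
    # Exact string match is an assumption, chosen to be conservative.
    same_torch = str(torch_version) == WHEEL_TORCH
    return same_python and same_torch


if __name__ == "__main__":
    try:
        import torch
        torch_version = torch.__version__
    except ImportError:
        torch_version = "not installed"
    ok = matches_prebuilt_wheel(sys.version_info, torch_version)
    verdict = "matches" if ok else "does not match"
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}, "
          f"torch {torch_version}: {verdict} the prebuilt wheel targets")
```

If the check fails, building mamba-ssm yourself with the linked tutorial is the fallback described above.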

(Not a workflow-embedded image)

https://github.com/user-attachments/assets/be263cc3-a104-4262-899b-242e9802719e

VFIMamba Example (top: Original, bottom: 5X, 20FPS)

ConvIR

Download the models from the Pretrained models - gdrive section and arrange them as follows:

    models\convir
    │   deraining.pkl
    │
    ├─defocus
    │       dpdd-base.pkl
    │       dpdd-large.pkl
    │       dpdd-small.pkl
    │
    ├─dehaze
    │       densehaze-base.pkl
    │       densehaze-small.pkl
    │       gta5-base.pkl
    │       gta5-small.pkl
    │       haze4k-base.pkl
    │       haze4k-large.pkl
    │       haze4k-small.pkl
    │       ihaze-base.pkl
    │       ihaze-small.pkl
    │       its-base.pkl
    │       its-small.pkl
    │       nhhaze-base.pkl
    │       nhhaze-small.pkl
    │       nhr-base.pkl
    │       nhr-small.pkl
    │       ohaze-base.pkl
    │       ohaze-small.pkl
    │       ots-base.pkl
    │       ots-small.pkl
    │
    ├─desnow
    │       csd-base.pkl
    │       csd-small.pkl
    │       snow100k-base.pkl
    │       snow100k-small.pkl
    │       srrs-base.pkl
    │       srrs-small.pkl
    │
    └─modeblur
            convir_gopro.pkl
            convir_rsblur.pkl
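With this many files, it is easy to drop one into the wrong subfolder. The sketch below lists what is actually on disk so it can be compared with the tree above. `list_convir_models` is a hypothetical helper, not part of FM_nodes, and the path passed to it is an assumption about where your `models` folder lives.

```python
from pathlib import Path


def list_convir_models(root):
    """Group the .pkl files under `root` by task subfolder ("" for the top level)."""
    root = Path(root)
    groups = {}
    for path in sorted(root.rglob("*.pkl")):
        task = path.parent.relative_to(root).as_posix()
        task = "" if task == "." else task
        groups.setdefault(task, []).append(path.name)
    return groups


if __name__ == "__main__":
    # "models/convir" is an assumption: adjust to your install location.
    for task, files in list_convir_models("models/convir").items():
        print(task or "(top level)", files)
```

For the layout above, `deraining.pkl` should appear under the top level and the remaining files under `defocus`, `dehaze`, `desnow`, and `modeblur`.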

StabStitch

Download all 3 models in the Code - Pre-trained model section.

models/stabstitch/temporal_warp.pth

models/stabstitch/spatial_warp.pth

models/stabstitch/smooth_warp.pth

Use interpolate_mode = NORMAL or do_linear_blend = True to eliminate dark borders. Inputs will be resized to 360x480. Using the StabStitch Crop Resize node is recommended.
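One plausible reason to crop before the fixed-size resize is to avoid stretching frames whose aspect ratio differs from the target. The sketch below computes a centered crop box with the target aspect ratio. It is not the StabStitch Crop Resize implementation: `center_crop_box` is a hypothetical helper, and reading 360x480 as height x width (a 480-wide, 360-tall frame) is an assumption.

```python
def center_crop_box(width, height, target_w=480, target_h=360):
    """Return (left, top, right, bottom) of the largest centered crop
    whose aspect ratio matches target_w:target_h."""
    # Compare aspect ratios by cross-multiplication to stay in integers.
    if width * target_h > height * target_w:
        # Source is wider than the target: trim the sides.
        crop_w = height * target_w // target_h
        crop_h = height
    else:
        # Source is taller than (or equal to) the target: trim top and bottom.
        crop_w = width
        crop_h = width * target_h // target_w
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


if __name__ == "__main__":
    print(center_crop_box(1920, 1080))  # (240, 0, 1680, 1080)
```

The returned box uses the same (left, upper, right, lower) order that Pillow's `Image.crop` expects, so a frame could be cropped with it and then resized to (480, 360) without distortion.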

| StabStitch | StabStitch Stabilize |
|-|-|
| stabstitch_stitch.json (Example videos in examples\stabstitch) | stabstich_stabilize.json |

(Not workflow-embedded images)

Credits

    @misc{chobola2024fast,
      title={Fast Context-Based Low-Light Image Enhancement via Neural Implicit Representations},
      author={Tomáš Chobola and Yu Liu and Hanyi Zhang and Julia A. Schnabel and Tingying Peng},
      year={2024},
      eprint={2407.12511},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2407.12511},
    }

    @misc{zhang2024vfimambavideoframeinterpolation,
      title={VFIMamba: Video Frame Interpolation with State Space Models},
      author={Guozhen Zhang and Chunxu Liu and Yutao Cui and Xiaotong Zhao and Kai Ma and Limin Wang},
      year={2024},
      eprint={2407.02315},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2407.02315},
    }

    @article{cui2024revitalizing,
      title={Revitalizing Convolutional Network for Image Restoration},
      author={Cui, Yuning and Ren, Wenqi and Cao, Xiaochun and Knoll, Alois},
      journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
      year={2024},
      publisher={IEEE}
    }

    @inproceedings{cui2023irnext,
      title={IRNeXt: Rethinking Convolutional Network Design for Image Restoration},
      author={Cui, Yuning and Ren, Wenqi and Yang, Sining and Cao, Xiaochun and Knoll, Alois},
      booktitle={International Conference on Machine Learning},
      pages={6545--6564},
      year={2023},
      organization={PMLR}
    }

    @article{nie2024eliminating,
      title={Eliminating Warping Shakes for Unsupervised Online Video Stitching},
      author={Nie, Lang and Lin, Chunyu and Liao, Kang and Zhang, Yun and Liu, Shuaicheng and Zhao, Yao},
      journal={arXiv preprint arXiv:2403.06378},
      year={2024}
    }