
ComfyDeploy: How Ollama Prompt Encode works in ComfyUI?

What is Ollama Prompt Encode?

A prompt generator and CLIP encoder using AI provided by Ollama.

How to install it in ComfyDeploy?

Head over to the machine page

- Click on the "Create a new machine" button

- Select the Edit build steps

- Add a new step -> Custom Node

- Search for Ollama Prompt Encode and select it

- Close the build step dialog and then click on the "Save" button to rebuild the machine

ComfyUI Ollama Prompt Encode

A prompt generator and CLIP encoder using AI provided by Ollama.

Prerequisites

Install Ollama and have the service running.

This node has been tested with ollama version 0.4.6.

Installation

Choose one of the following methods to install the node:

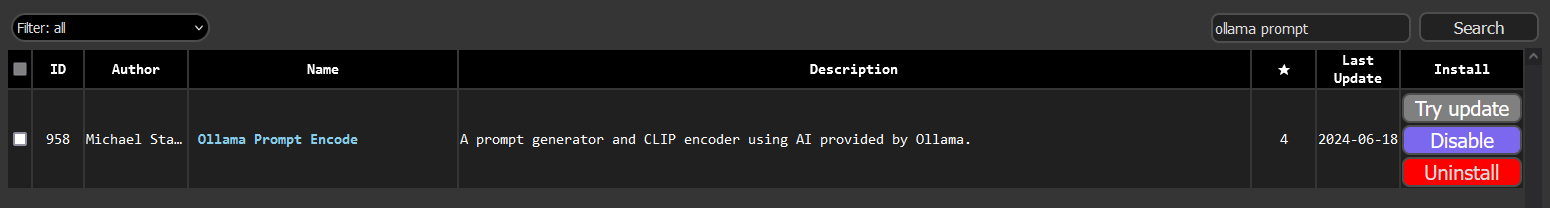

via ComfyUI Manager

If you have the ComfyUI Manager installed, you can install the node from its Install Custom Nodes menu.

Search for Ollama Prompt Encode and click Install.

via Comfy CLI

If you have the Comfy CLI installed, you can install the node from the command line.

comfy node registry-install comfyui-ollama-prompt-encode

The registry instance can be found on [registry.comfy.org](https://registry.comfy.org/publishers/michaelstanden/nodes/comfyui-ollama-prompt-encode).

via Git

Clone this repository into your <comfyui>/custom_nodes directory.

cd <comfyui>/custom_nodes

git clone https://github.com/ScreamingHawk/comfyui-ollama-prompt-encode

Usage

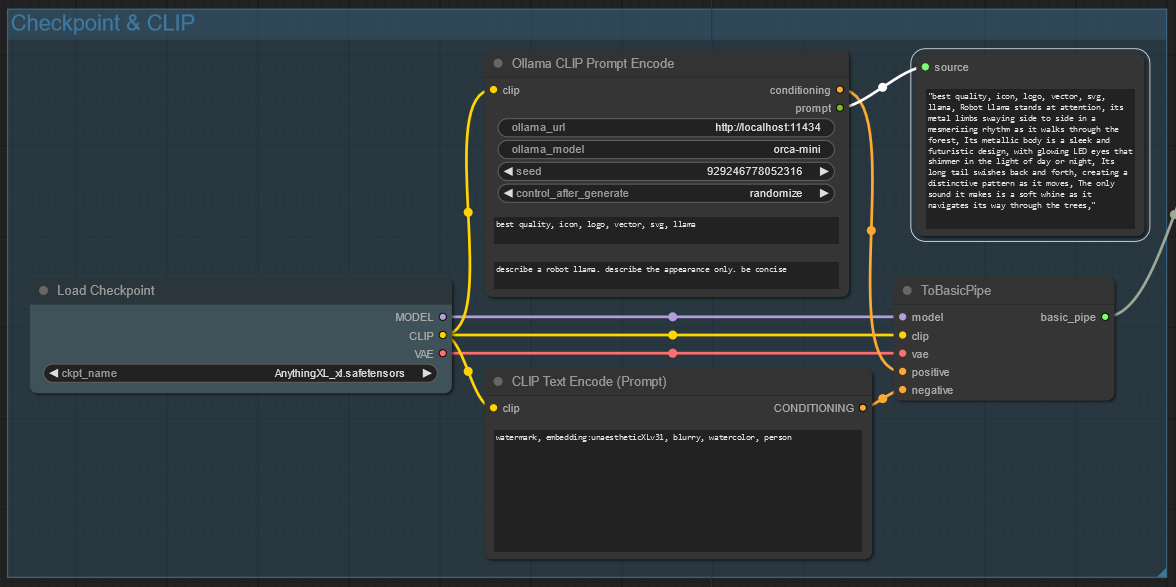

The Ollama CLIP Prompt Encode node is designed to replace the default CLIP Text Encode (Prompt) node. It generates a prompt using the Ollama AI model and then encodes the prompt with CLIP.

The node will output the generated prompt as a string. This can be viewed with rgthree's Display Any node.

An example workflow is available in the docs folder.

Ollama URL

The URL to the Ollama service. The default is http://localhost:11434.
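Before wiring the node into a workflow, it can help to confirm that the service answers at this URL. The following is a minimal sketch, not part of the node itself: it assumes Ollama's REST endpoint `/api/tags` (which lists locally available models), and the helper names `tags_endpoint` and `list_local_models` are hypothetical.

```python
import json
import urllib.request

# Default from this README; change it if Ollama runs elsewhere.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def tags_endpoint(base_url: str = DEFAULT_OLLAMA_URL) -> str:
    """Return the model-listing endpoint for a given base URL (hypothetical helper)."""
    return base_url.rstrip("/") + "/api/tags"


def list_local_models(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 5.0) -> list:
    """Ask the Ollama service which models are already downloaded.

    Raises urllib.error.URLError if the service is not reachable.
    """
    with urllib.request.urlopen(tags_endpoint(base_url), timeout=timeout) as resp:
        data = json.load(resp)
    return [model["name"] for model in data.get("models", [])]
```

Calling `list_local_models()` with the service running should return the names of the installed models; a connection error usually means the service is not running or the URL is wrong.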

Ollama Model

This is the model that is used to generate your prompt.

Some models that work well with this prompt generator are:

- orca-mini

- mistral

- tinyllama

The node will automatically download the model if it is not already present on your system.

Smaller models are recommended for faster generation times.

Seed

The seed that will be used to generate the prompt. This is useful for generating the same prompt multiple times or ensuring a different prompt is generated each time.
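To illustrate how a seed can make generation repeatable, here is a rough sketch of a direct call to Ollama's REST API. It assumes the `/api/generate` endpoint with a `seed` entry under `options`; the node's own implementation may differ (for example, it may use a client library), and the function names here are hypothetical.

```python
import json
import urllib.request


def build_generate_request(model: str, text: str, seed: int) -> dict:
    """Build a non-streaming generate payload with a fixed seed (hypothetical helper)."""
    return {
        "model": model,
        "prompt": text,
        "stream": False,  # ask for a single JSON object instead of a stream
        "options": {"seed": seed},
    }


def generate(base_url: str, payload: dict, timeout: float = 120.0) -> str:
    """POST the payload to the Ollama service and return the generated text."""
    request = urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["response"]
```

With the same model, text, and seed, repeated calls are expected to give the same prompt; changing the seed is the simplest way to get a different one.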

Prepend Tags

A string that will be prepended to the generated prompt.

This is useful for models like pony that work best with extra tags like score_9, score_8_up.
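As an illustration of the effect, the sketch below joins the tags and the generated prompt with a comma. The helper name `prepend_tags` and the exact separator are assumptions made for this example; the node may combine the two strings differently.

```python
def prepend_tags(tags: str, generated: str) -> str:
    """Put fixed tags in front of a generated prompt (hypothetical helper)."""
    # Drop stray whitespace and a trailing comma so the join stays clean.
    tags = tags.strip().strip(",").strip()
    generated = generated.strip()
    if not tags:
        return generated
    if not generated:
        return tags
    return f"{tags}, {generated}"


print(prepend_tags("score_9, score_8_up", "young girl, blue hair"))
```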

Text

The text that will be used by the AI model to generate the prompt.

Comma Separated Response

If checked, the node generates a prompt made up of many comma-separated tags, e.g. young girl, photorealistic, blue hair. This suits models that respond better to tags, such as pony.

If unchecked, the node generates a more descriptive, natural-language prompt, e.g. A photorealistic image of a young girl with blue hair. This suits models that respond better to descriptive prompts, such as Flux.
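One way to picture this toggle is as a switch between two instructions sent to the language model, followed by light cleanup of the tag list. The sketch below is purely illustrative: the instruction wording and the names `style_instruction` and `normalize_tags` are assumptions, not the node's actual prompts or code.

```python
def style_instruction(comma_separated: bool) -> str:
    """Pick the instruction given to the language model (hypothetical wording)."""
    if comma_separated:
        return "Respond with a comma separated list of short tags."
    return "Respond with one or two descriptive sentences."


def normalize_tags(response: str) -> str:
    """Tidy a tag-style response into 'tag, tag, tag' form."""
    # Treat newlines as separators too, then drop empty entries.
    parts = [part.strip() for part in response.replace("\n", ",").split(",")]
    return ", ".join(part for part in parts if part)


print(normalize_tags("young girl,photorealistic ,\n blue hair,"))
```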

Testing

Run the tests with:

python -m unittest

Credits

This software is provided under the MIT License, so it is free to use as long as you give me credit.