
ComfyDeploy: How ComfyUI-AutoLabel works in ComfyUI?

What is ComfyUI-AutoLabel?

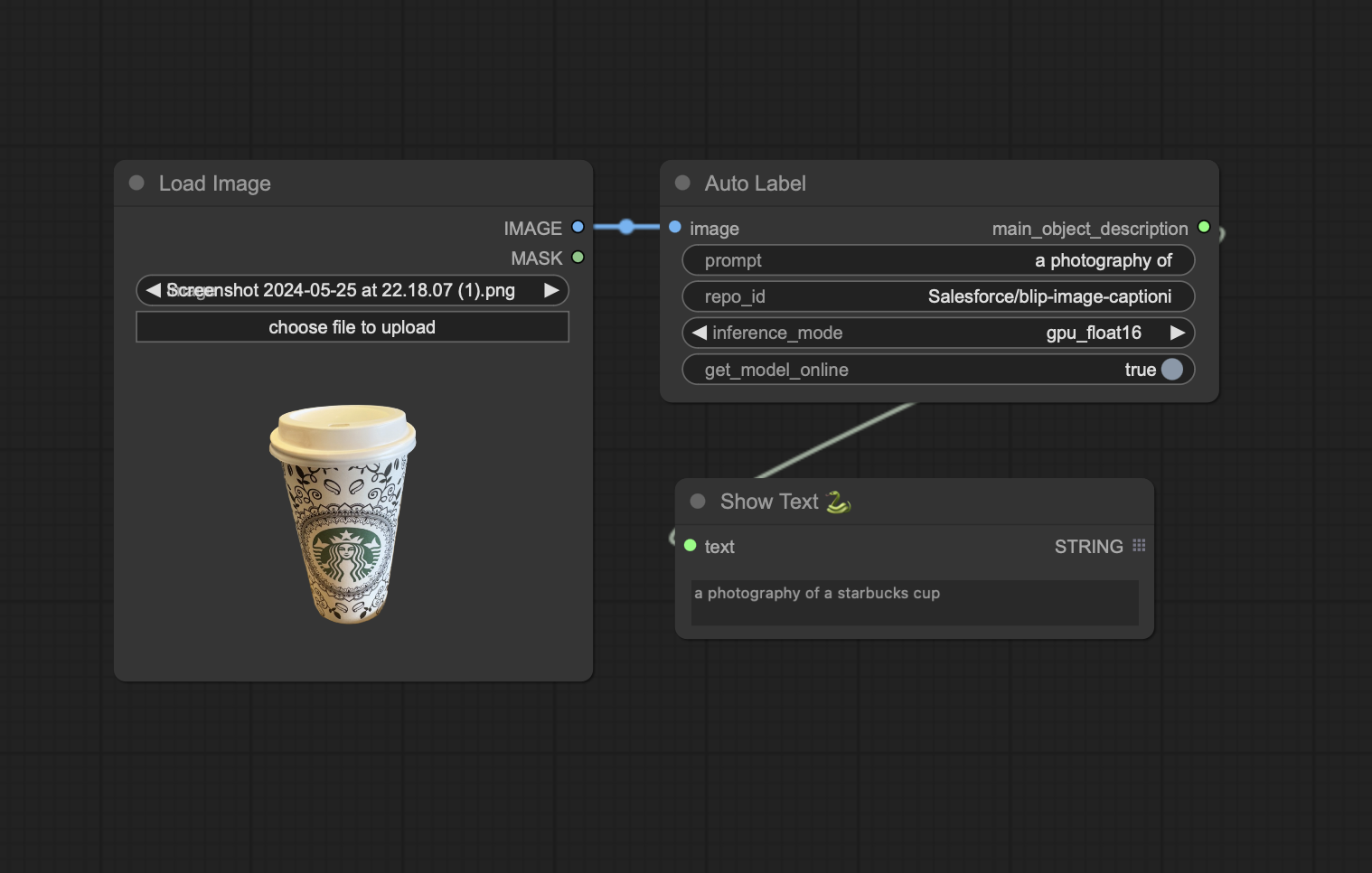

ComfyUI-AutoLabel is a custom node for ComfyUI that uses BLIP (Bootstrapping Language-Image Pre-training) to generate detailed descriptions of the main object in an image. This node leverages the power of BLIP to provide accurate and context-aware captions for images.

How to install it in ComfyDeploy?

Head over to the machine page

- Click on the "Create a new machine" button

- Select the "Edit" build steps

- Add a new step -> Custom Node

- Search for ComfyUI-AutoLabel and select it

- Close the build step dialog and then click on the "Save" button to rebuild the machine

ComfyUI-AutoLabel


Features

- Image to Text Description: Generate detailed descriptions of the main object in an image.

- Customizable Prompts: Provide your own prompt to guide the description generation.

- Flexible Inference Modes: Supports GPU, GPU with float16, and CPU inference modes.

- Offline Mode: Option to download and use models offline.

Installation

- Clone the Repository: Clone this repository into your custom_nodes folder in ComfyUI.

      git clone https://github.com/fexploit/ComfyUI-AutoLabel custom_nodes/ComfyUI-AutoLabel

- Install Dependencies: Navigate to the cloned folder and install the required dependencies.

      cd custom_nodes/ComfyUI-AutoLabel
      pip install -r requirements.txt

Usage

Adding the Node

- Start ComfyUI.

- Add the AutoLabel node from the custom nodes list.

- Connect an image input and configure the parameters as needed.

Parameters

- image (required): The input image tensor.

- prompt (optional): A string to guide the description generation (default: "a photography of").

- repo_id (optional): The Hugging Face model repository ID (default: "Salesforce/blip-image-captioning-base").

- inference_mode (optional): The inference mode, can be "gpu_float16", "gpu", or "cpu" (default: "gpu").

- get_model_online (optional): Boolean flag to download the model online if not already present (default: True).
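To illustrate how these parameters could map onto a BLIP captioning call, here is a minimal sketch using the Hugging Face transformers library. It is not the node's actual implementation. The names resolve_inference_mode, InferenceConfig, and caption_image are hypothetical, and the sketch assumes that "gpu" means CUDA with float32, that get_model_online=False corresponds to local_files_only=True, and that the input has already been converted to a PIL image.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceConfig:
    """Hypothetical container for the device and dtype implied by inference_mode."""
    device: str
    dtype: str


def resolve_inference_mode(mode: str) -> InferenceConfig:
    """Map an inference_mode string to a device and dtype (assumed mapping)."""
    table = {
        "gpu_float16": InferenceConfig(device="cuda", dtype="float16"),
        "gpu": InferenceConfig(device="cuda", dtype="float32"),
        "cpu": InferenceConfig(device="cpu", dtype="float32"),
    }
    if mode not in table:
        raise ValueError(f"unknown inference_mode: {mode!r}")
    return table[mode]


def caption_image(
    image,  # assumed to be a PIL image, not a raw ComfyUI tensor
    prompt: str = "a photography of",
    repo_id: str = "Salesforce/blip-image-captioning-base",
    inference_mode: str = "gpu",
    get_model_online: bool = True,
) -> str:
    """Generate a caption with BLIP, conditioned on the given prompt."""
    # Heavy imports are kept local so the helper above works without them.
    import torch
    from transformers import BlipForConditionalGeneration, BlipProcessor

    cfg = resolve_inference_mode(inference_mode)
    dtype = getattr(torch, cfg.dtype)

    # Assumption: offline use is expressed as local_files_only=True.
    offline = not get_model_online
    processor = BlipProcessor.from_pretrained(repo_id, local_files_only=offline)
    model = BlipForConditionalGeneration.from_pretrained(
        repo_id, local_files_only=offline
    ).to(cfg.device, dtype)

    # The prompt is passed as the text prefix that BLIP continues.
    inputs = processor(image, prompt, return_tensors="pt").to(cfg.device, dtype)
    output_ids = model.generate(**inputs)
    return processor.decode(output_ids[0], skip_special_tokens=True)
```

With a PIL image loaded via PIL.Image.open(...), a call such as caption_image(img, inference_mode="cpu") would return a caption that begins with the prompt text. The float16 mode roughly halves the model's memory footprint on the GPU, which is the usual reason to offer it as a separate option.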

Contributing

Contributions are welcome! Please open an issue or submit a pull request with your changes.

License

This project is licensed under the MIT License.

Acknowledgements

Contact

For any inquiries, please open an issue on the GitHub repository.