ComfyDeploy: How ComfyUI Griptape Nodes works in ComfyUI?

What is ComfyUI Griptape Nodes?

This repo creates a series of nodes that enable you to utilize the [Griptape Python Framework](https://github.com/griptape-ai/griptape/) with ComfyUI, integrating AI into your workflow.

How to install it in ComfyDeploy?

Head over to the machine page

- Click on the "Create a new machine" button

- Select the `Edit` build steps

- Add a new step -> Custom Node

- Search for `ComfyUI Griptape Nodes` and select it

- Close the build step dialog and then click on the "Save" button to rebuild the machine

ComfyUI Griptape Nodes

This repo creates a series of nodes that enable you to utilize the Griptape Python Framework with ComfyUI, integrating LLMs (Large Language Models) and AI into your workflow.

Instructions and tutorials

Watch the trailer and all the instructional videos on our YouTube Playlist.

The repo currently has a subset of Griptape nodes, with more to come soon. Current nodes can:

- Create Agents that can chat using these models:

  - Local - via Ollama and LM Studio
    - Llama 3
    - Mistral
    - etc.
  - Via Paid API Keys
    - OpenAI
    - Azure OpenAI
    - Amazon Bedrock
    - Cohere
    - Google Gemini
    - Anthropic Claude
    - Hugging Face (Note: Not all models featured on the Hugging Face Hub are supported by this driver. Models that are not supported by Hugging Face serverless inference will not work with this driver. Due to the limitations of Hugging Face serverless inference, only models that are smaller than 10GB are supported.)

- Control agent behavior and personality with access to Rules and Rulesets.

- Give Agents access to Tools:

- Run specific Agent Tasks:

- Generate Images using these models:

  - OpenAI
  - Amazon Bedrock Stable Diffusion
  - Amazon Bedrock Titan
  - Leonardo.AI

- Audio

  - Transcribe Audio
  - Text to Voice

Ultimate Configuration

Use nodes to control every aspect of the Agent's behavior, with the following drivers:

- Prompt Driver

- Image Generation Driver

- Embedding Driver

- Vector Store Driver

- Text to Speech Driver

- Audio Transcription Driver

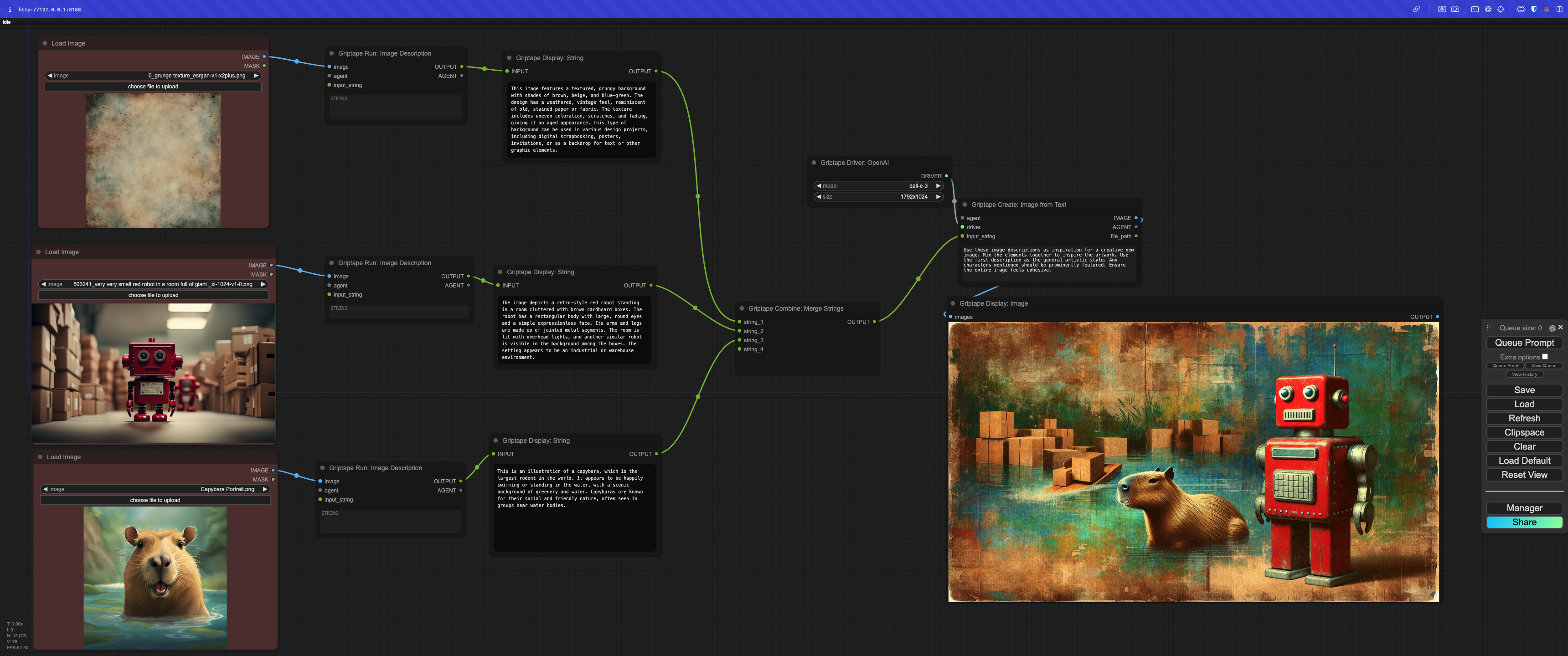

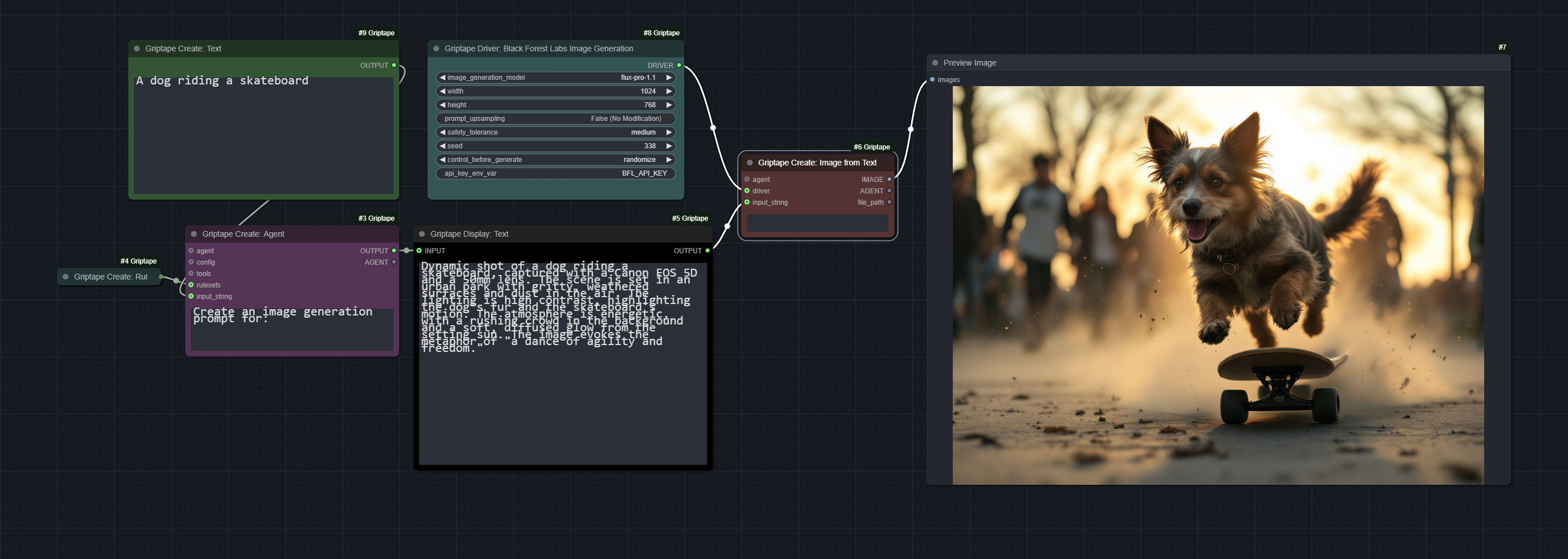

Example

In this example, we're using three Image Description nodes to describe the given images. Those descriptions are then Merged into a single string which is used as inspiration for creating a new image using the Create Image from Text node, driven by an OpenAI Driver.

The following image is a workflow you can drag into your ComfyUI Workspace, demonstrating all the options for configuring an Agent.

More examples

You can preview and download more examples here.

Using the nodes - Video Tutorials

- Installation: https://youtu.be/L4-HnKH4BSI?si=Q7IqP-KnWug7JJ5s

- Griptape Agents: https://youtu.be/wpQCciNel_A?si=WF_EogiZRGy0cQIm

- Controlling which LLM your Agents use: https://youtu.be/JlPuyH5Ot5I?si=KMPjwN3wn4L4rUyg

- Griptape Tools - Featuring Task Memory and Off-Prompt: https://youtu.be/TvEbv0vTZ5Q

- Griptape Rulesets, and Image Creation: https://youtu.be/DK16ouQ_vSs

- Image Generation with multiple drivers: https://youtu.be/Y4vxJmAZcho

- Image Description, Parallel Image Description: https://youtu.be/OgYKIluSWWs?si=JUNxhvGohYM0YQaK

- Audio Transcription: https://youtu.be/P4GVmm122B0?si=24b9c4v1LWk_n80T

- Using Ollama as a Configuration Driver: https://youtu.be/jIq_TL5xmX0?si=ilfomN6Ka1G4hIEp

- Combining Rulesets: https://youtu.be/3LDdiLwexp8?si=Oeb6ApEUTqIz6J6O

- Integrating Text: https://youtu.be/_TVr2zZORnA?si=c6tN4pCEE3Qp0sBI

- New Nodes & Quality of life improvements: https://youtu.be/M2YBxCfyPVo?si=pj3AFAhl2Tjpd_hw

- Merge Input Data: https://youtu.be/wQ9lKaGWmZo?si=FgboU5iUg82pXRkC

- Setting default agent configurations: https://youtu.be/IkioCcldEms?si=4uUu-y9UvIJWVBdE

- Merge Text with dynamic inputs and custom separator: https://youtu.be/1fHAzKVPG4M?si=6JHe1NA2_a_nl9rG

- Multiple Image Descriptions and Local Multi-Modal Models: https://youtu.be/KHz7CMyOk68?si=oQXud6NOtNHrXLez

- WebSearch Node Now Allows for Driver Functionality in Griptape Nodes: https://youtu.be/4_dkfdVUnRI?si=DA4JvegV0mdHXPDP

- Persistent Display Text: https://youtu.be/9229bN0EKlc?si=Or2eu3Nuh7lxgfEU

- Convert an Agent to a Tool.. and give it to another Agent: https://youtu.be/CcRot5tVAU8?si=lA0v5kDH51nYWwgG

- Text-To-Speech Nodes: https://youtu.be/PP1uPkRmvoo?si=QSaWNCRsRaIERrZ4

- Update to Managing Your API Keys in ComfyUI Griptape Nodes: https://www.youtube.com/watch?v=v80A7rtIjko

Recent Changelog

Dec 21, 2024

- Fixed issue where Griptape Agent Config: Custom Structure node was still requiring OPENAI_API_KEY.

- Updated to Griptape v1.0.2

- OpenAi, Anthropic, and Ollama nodes pull directly from their apis now to get the available models.

- Added check to ensure Ollama not running doesn't cause Griptape Nodes to fail.

Dec 12, 2024

- Updated to Griptape Framework 1.0!

- Added check for BlackForest install issues to not block Griptape Nodes running

Nov 30, 2024

-

New Nodes:

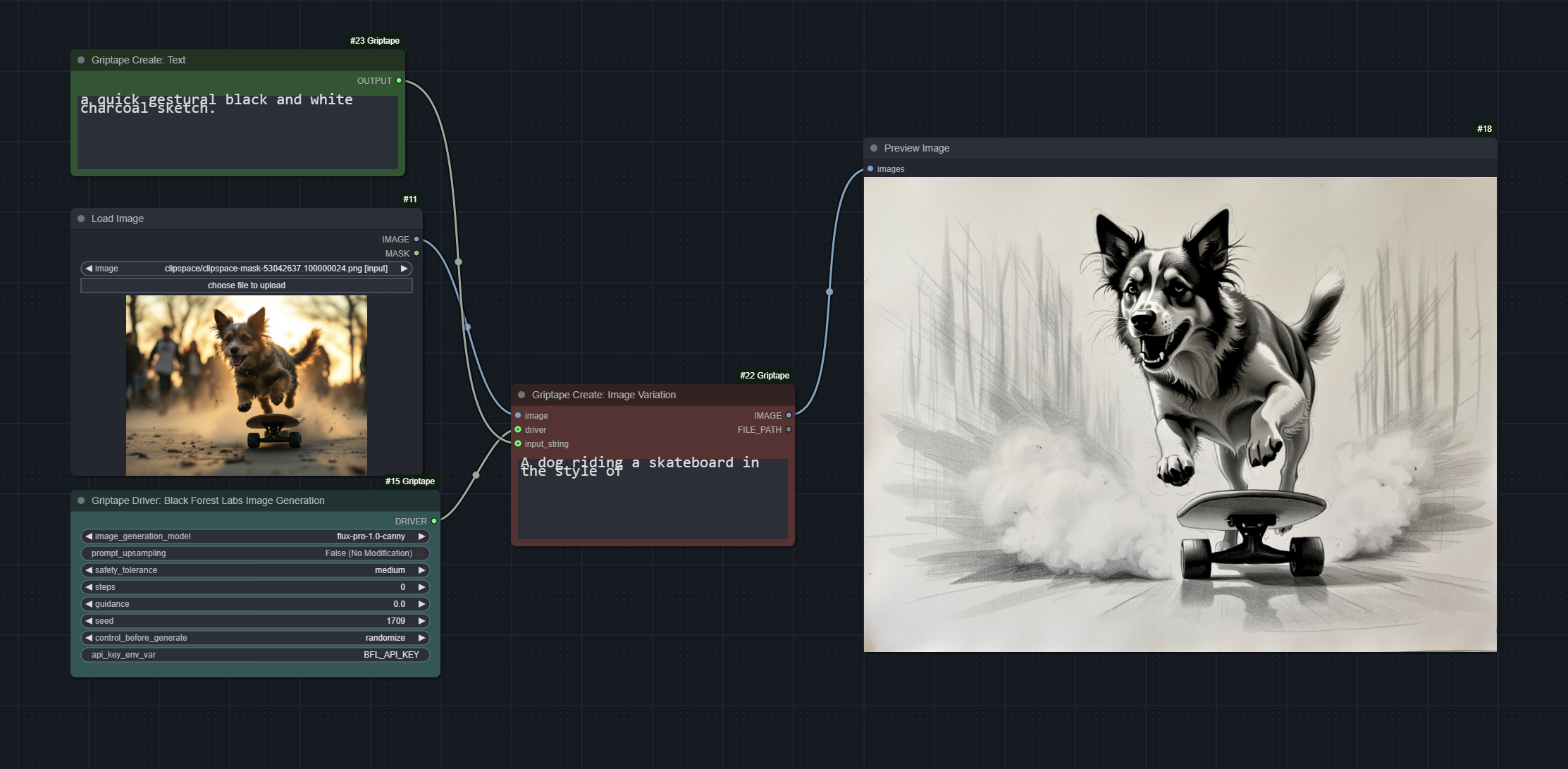

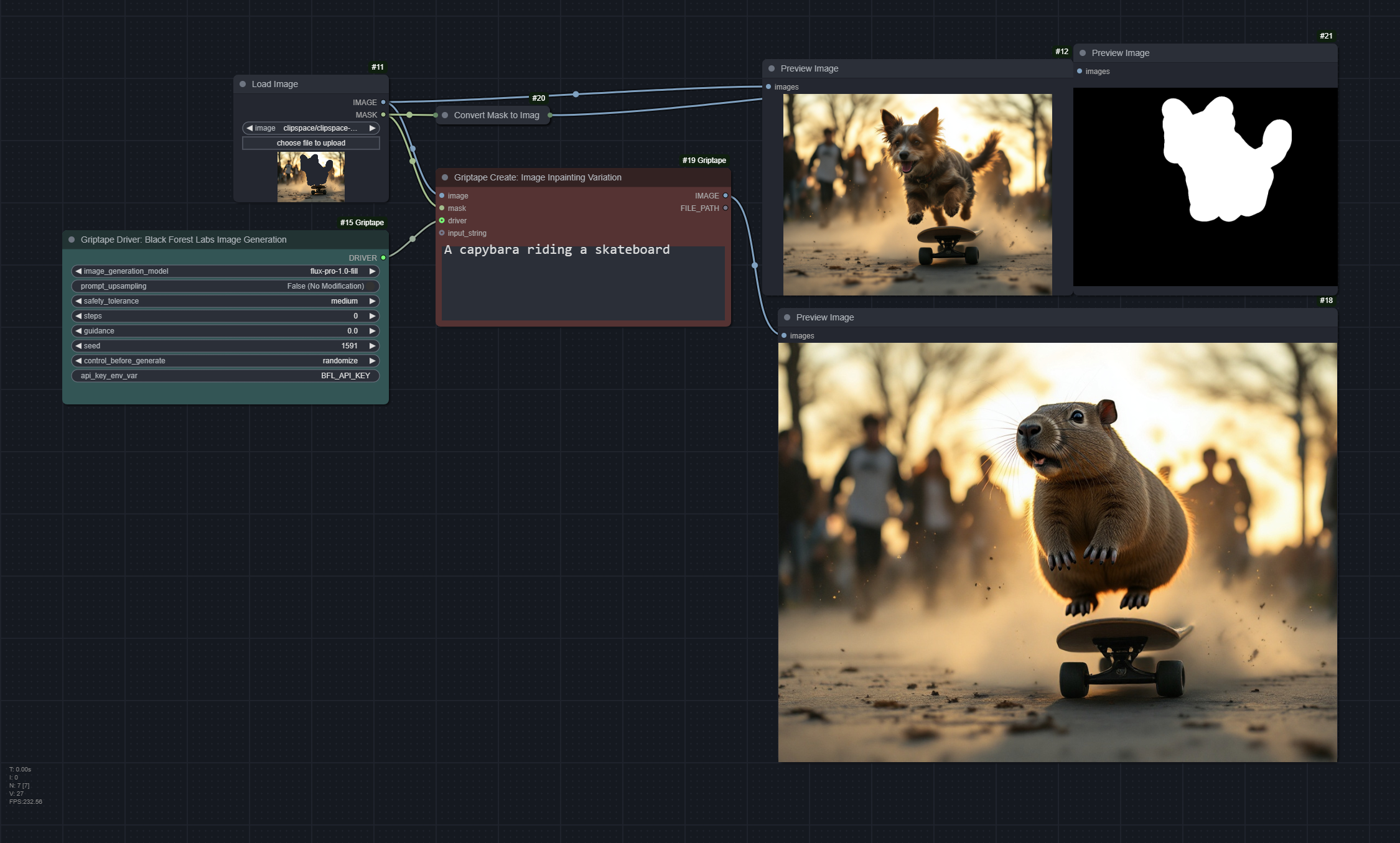

Griptape Driver: Black Forest Labs Image Generation- Now generate images with the incredible Flux models -flux-pro-1.1,flux-pro,flux-dev, andflux-pro-1.1-ultra.- Requires an API_KEY from Black Forest Labs (https://docs.bfl.ml/)

- Utilizes new Griptape Extension: https://github.com/griptape-ai/griptape-black-forest

- It also works with the

Griptape Create: Image Variationnode.

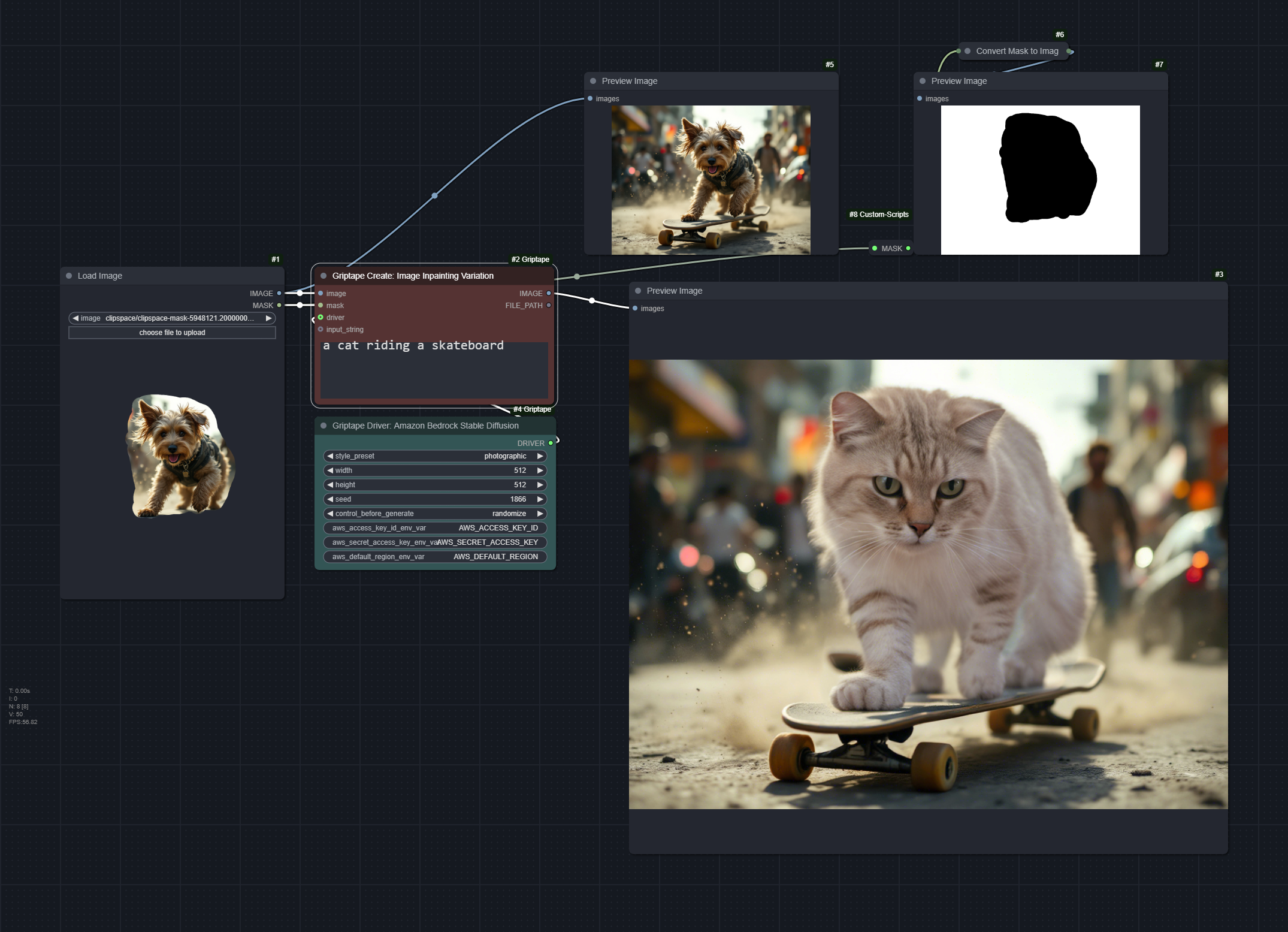

Griptape Create: Image Inpainting Variationto the Griptape -> Image menu. Gives the ability to paint a mask and replace that part of the image.

Nov 29, 2024

- Iterating on configuration settings to improve compatibility with ComfyUI Desktop

Nov 28, 2024

- ⚠️ Temporarily removed BlackForestLabs Driver nodes while resolving install issues. There appears to be an installation issue for these nodes, so I'm temporarily removing them until it's resolved.

- Removed old configuration settings - now relying completely on ComfyUI's official settings

Nov 27, 2024

- Added example: PDF -> Profile Pic where a resume in pdf form is summarized, then used as inspiration for an image generation prompt to create a profile picture.

- Fixed: `gtUIKnowledgeBaseTool` was breaking if a Griptape Cloud Knowledge Base had an `_` in the name. It now handles that situation.

Nov 26, 2024

- Upgrade to Griptape Framework v0.34.3

- New Nodes:

  - `Griptape Create: Image Inpainting Variation` to the Griptape -> Image menu. Gives the ability to paint a mask and replace that part of the image.
  - `Griptape Run: Task` - Combines/Replaces `Griptape Run: Prompt Task`, `Griptape Run: Tool Task`, and `Griptape Run Toolkit Task` into a single node that knows what to do.
  - `Griptape Run: Text Extraction` to the Griptape -> Text menu

- Added `keep_alive` parameter to `Ollama Prompt Driver` to give the user the ability to control how long to keep the model running. Setting it to 0 will do the same as an `ollama stop <model>` command-line execution. Default setting is 240 seconds to match the current default.

- Moved node: `Griptape Run: Text Summary` to the Griptape -> Text menu

- Updated `Griptape RAG Retrieve: Text Loader Module` to take a file input or text input.

- Fixed ExtractionTool to use a default of `gpt-4o-mini`

- Added some text files for testing text loading

- Added Examples to Examples Readme
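To make the `keep_alive` behavior described above more concrete, here is a minimal sketch of the kind of request body it ends up in. It assumes a local Ollama server on its default address and uses Ollama's `/api/generate` endpoint directly. The helper names `build_payload` and `send` are hypothetical, and this is not the code the node itself runs.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://127.0.0.1:11434/api/generate"


def build_payload(model: str, prompt: str = "", keep_alive: int = 240) -> dict:
    """Build a request body; keep_alive is how many seconds the model stays loaded."""
    payload = {"model": model, "keep_alive": keep_alive, "stream": False}
    if prompt:
        payload["prompt"] = prompt
    return payload


def send(payload: dict, url: str = OLLAMA_URL) -> dict:
    """POST the payload to a running Ollama server and return the decoded JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    # No prompt plus keep_alive=0 asks Ollama to unload the model right away.
    print(build_payload("llama3", keep_alive=0))
```

Calling `send(build_payload("llama3", keep_alive=0))` against a running server should have the same effect as stopping the model from the command line.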

Nov 9, 2024

- Upgrade to Griptape Framework v0.34.2

- Fixed combine nodes breaking when re-connecting output

Nov 6, 2024

-

Upgrade to Griptape Framework v0.34.1

- Fix to

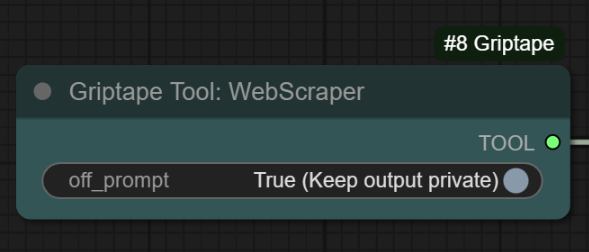

WebScraperToolprovides better results when usingoff_prompt.

- Fix to

-

Fixed bug where urls were dropping any text after the

:. Example: "What is https://griptape.ai" was being converted to "What is https:". This is due to thedynamicpromptfunctionality of ComfyUI, so I've disabled that. -

Added context string to all BOOLEAN parameters to give the user a better idea as to what the particular boolean option does. For example, intead of just

TrueorFalse, the tools now explainoff_prompt.

Nov 4, 2024

- Fixed bug where OPENAI_API_KEY was still required, and was causing some install issues.

- Added video to README with how to manage api keys.

Nov 1, 2024

-

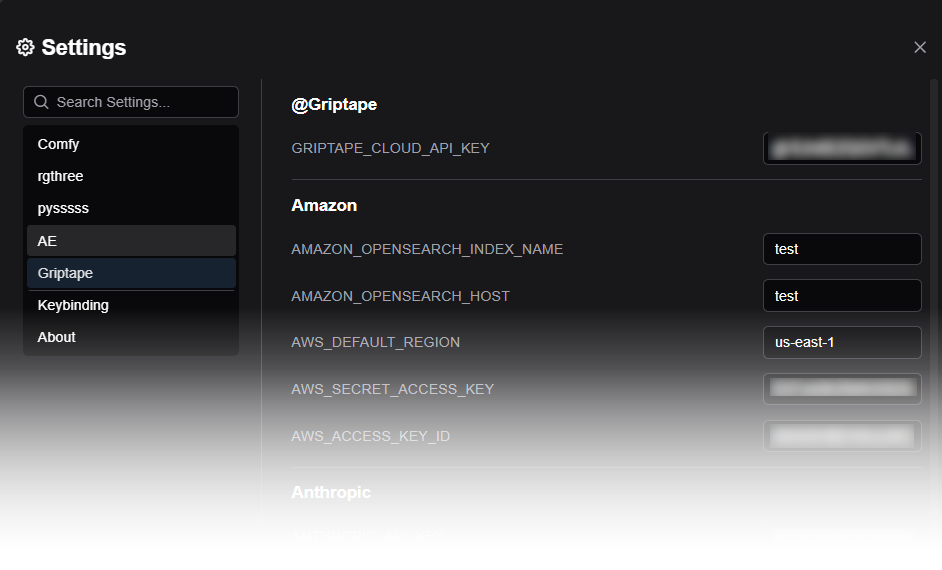

Major reworking of how API keys are set. Now you can use the ComfyUI Settings window and add your API keys there. This should simplify things quite a bit as you no longer need to create a

.envfile in your ComfyUI folder.- Note: Existing environment variables will be picked up automatically.

Oct 31, 2024

- Added tooltips for all drivers to help clarify properties

- Added fix for Ollama Driver Config so it wouldn't fail if no embedding driver was specified.

- Fix for Convert Agent to Tool node.

Oct 30, 2024

- Updated to Griptape Framework v0.34.0

- Breaking Changes

-

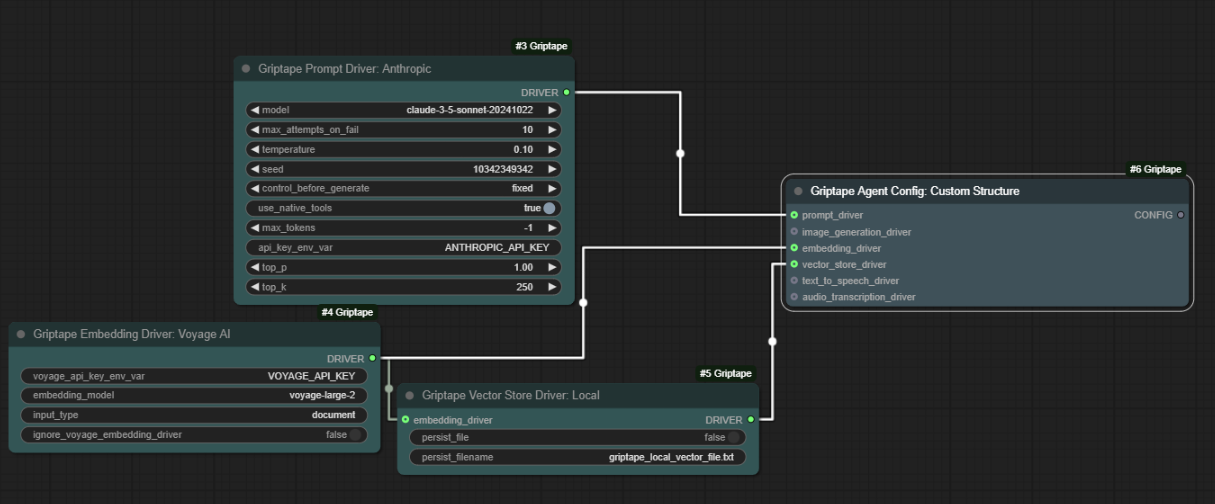

AnthropicDriversConfignode no longer includes Embedding Driver. If you wish to use Claude within a RAG pipeline, build aConfig: Custom Structureusing a Prompt Driver, Embedding Driver, and Vector Store Driver. See the attached image for an example:

-

Oct 23, 2024

- Updated Anthropic Claude Prompt Driver to include `claude-3-5-sonnet-20241022`

- Updated Anthropic Claude Config to offer option to not use Voyage API for Embedding Driver. Just set `ignore_voyage_embedding_driver` to `True`

Oct 11, 2024

- Updated to Griptape Framework v0.33.1 to resolve install bugs

Oct 10, 2024

- Updated to Griptape Framework v0.33

- Added `TavilyWebSearchDriver`. Requires a Tavily api key.

- Added `ExaWebSearchDriver`. Requires an Exa api key.

Sept 20, 2024

- Hotfix for `Griptape Agent Config: LM Studio Drivers`. The `base_url` parameter wasn't being set properly causing a connection error.

Sept 12, 2024

- Hotfix for `Griptape Run: Tool Task` node. It now properly handles the output of the tool being a list.

Sept 11, 2024

- Added `top_p` and `top_k` to Anthropic and Google Prompt Drivers

- Fixed automatic display node text resizing

- Fixed missing display of the Env node

Sept 10, 2024

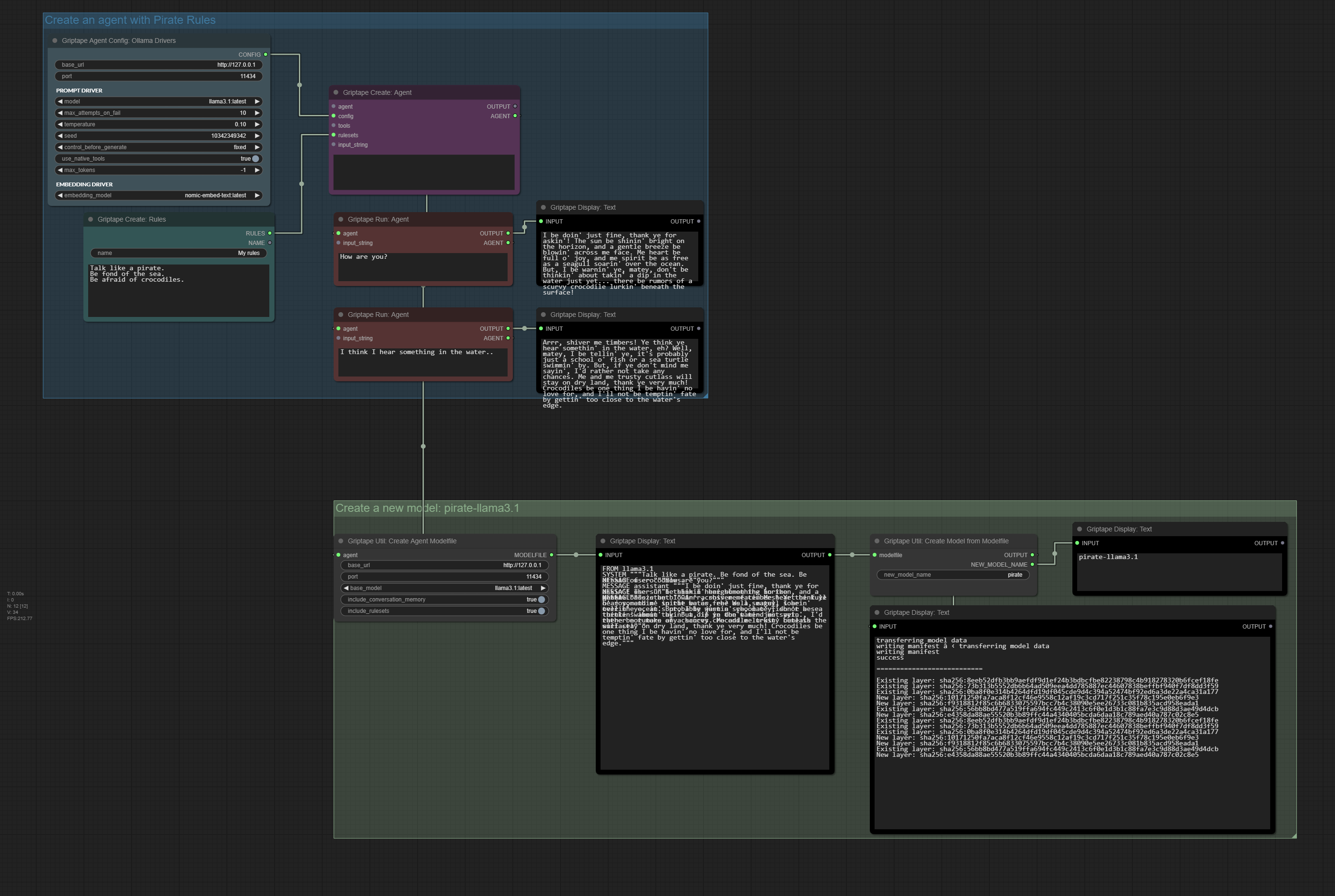

-

New Nodes Griptape now has the ability to generate new models for

Ollamaby creating a Modelfile. This is an interesting technique that allows you to create new models on the fly.Griptape Util: Create Agent Modelfile. Given an agent with rules and some conversation as an example, create a new Ollama Modelfile with a SYSTEM prompt (Rules), and MESSAGES (Conversation).Griptape Util: Create Model from Modelfile. Given a Modelfile, create a new Ollama model.Griptape Util: Remove Ollama Model. Given an Ollama model name, remove the model from Ollama. This will help you cleanup unnecessary models. Be Careful with this one, as there is no confirmation step!
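For readers who have not seen a Modelfile before, the following is a rough sketch of how rules and an example conversation could be turned into one. It uses the standard Modelfile instructions `FROM`, `SYSTEM`, and `MESSAGE`. The function `build_modelfile` is hypothetical, and the file the node actually writes may be laid out differently.

```python
def build_modelfile(base_model: str, rules: list, conversation: list) -> str:
    """Return Modelfile text with a SYSTEM prompt (rules) and MESSAGE lines (conversation).

    conversation is a list of (role, text) pairs, where role is "user" or "assistant".
    Message texts are assumed to be single-line here.
    """
    lines = [f"FROM {base_model}"]
    if rules:
        # All rules are joined into one system prompt, one rule per line.
        system_prompt = "\n".join(rules)
        lines.append(f'SYSTEM """{system_prompt}"""')
    for role, text in conversation:
        lines.append(f"MESSAGE {role} {text}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(
        build_modelfile(
            "llama3",
            ["Talk like a pirate."],
            [("user", "Hi"), ("assistant", "Ahoy!")],
        )
    )
```

The resulting text can be saved to a file and passed to `ollama create <name> -f <file>` to register the new model.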

Sept 5, 2024

MAJOR UPDATE

-

Update to Griptape Framework to v0.31.0

-

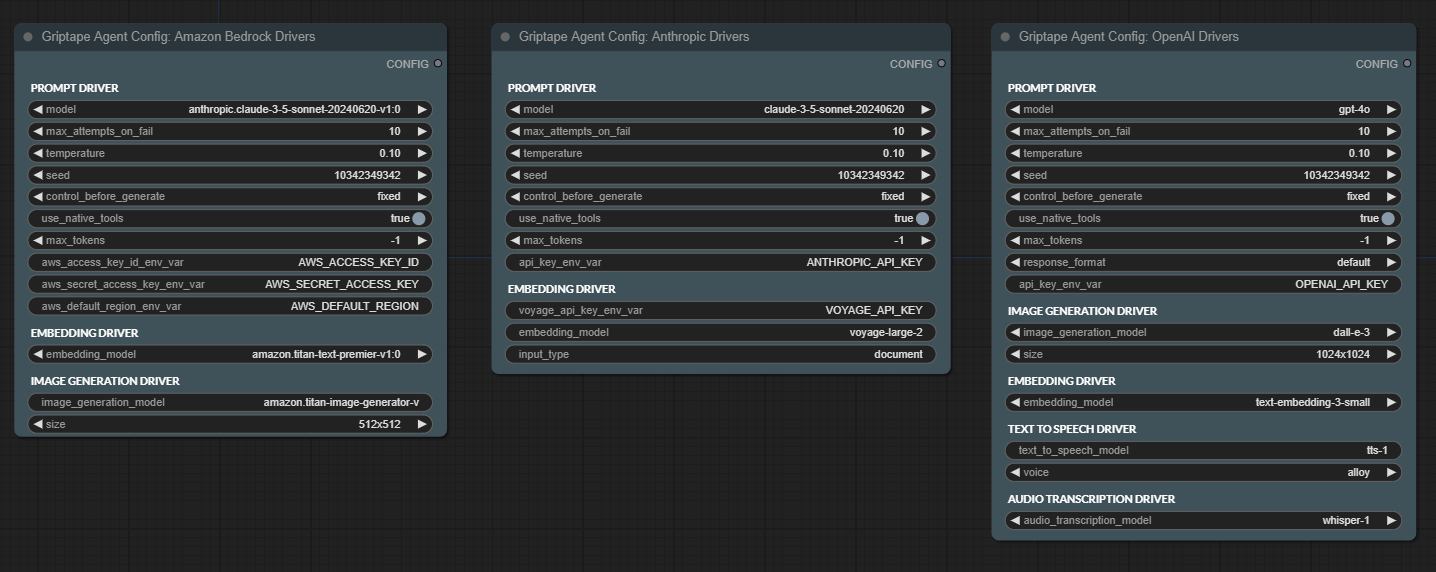

There are some New Configuration Drivers nodes! These new nodes replace the previous

Griptape Agent Confignodes (which still exist, but have been deprecated). They display the various drivers that are available for each general config, and allow you to make changes per driver. See the image for examples:

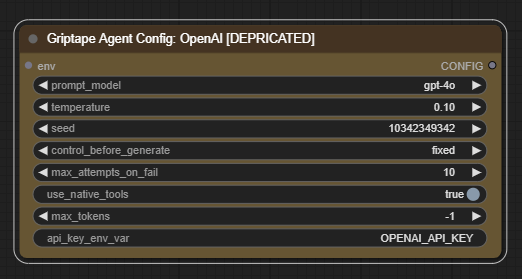

- Old

Griptape Agent Confignodes still exist, but have been deprecated. They will be removed in a future release. Old workflows should automatically display the older nodes as deprecated. It's highly recommended to replace these old nodes with the new ones. I have tried to minimize breaking nodes, but if some may exist. I appologize for this if it happens.

- New Nodes

Griptape Agent Config: Cohere Drivers: A New Cohere node.Griptape Agent Config: Expand: A node that lets you expand Config Drivers nodes to get to their individual drivers.Griptape RAG Nodesa whole new host of nodes related to Retrieval Augmented Generation (RAG). I've included a sample in the examples folder that shows how to use these nodes.

Griptape RAG: Tool- A node that lets you create a tool for RAG.Griptape RAG: Engine- A node that lets you create an engine for RAG containing multiple stages. Learn more here: https://docs.griptape.ai/stable/griptape-framework/engines/rag-engines/:- Query stage - a stage that allows you to manipulate a user's query before RAG starts.

- Retrieval stage - the stage where you gather the documenents and vectorize them. This stage can contain multiple "modules" which can be used to gather documents from different sources.

- Rerank stage - a stage that re-ranks the results from the retrieval stage.

- Response stage - a stage that uses a prompt model to generate a response to the user's question. It also includes multiple modules.

Griptape Combine: RAG Module List- A node that lets you combine modules for a stage.- Various Modules:

Griptape RAG Query: Translate Module- A module that translates the user's query into another language.Griptape RAG Retrieve: Text Loader Module- A module that lets you load text and vectorize it in real time.Griptape RAG Retrieve: Vector Store Module- A module that lets you load text from an existing Vector Store.Griptape RAG Rerank: Text Chunks Module- A module that re-ranks the text chunks from the retrieval stage.Griptape RAG Response: Prompt Module- Uses an LLM Prompt Driver to generate a response.Griptape RAG Response: Text Chunks Module- Just responds with Text Chunks.Griptape RAG Response: Footnote Prompt Module- A Module that ensures proper footnotes are included in the response.
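The four RAG stages can be hard to picture from a list of nodes, so here is a toy sketch of how they chain together. It is plain Python and does not use the Griptape API. Every name in it is hypothetical, and simple word overlap stands in for the embedding and vector search a real Retrieval stage would use.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    score: float = 0.0


def _words(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words without punctuation."""
    return {word.strip(".,!?") for word in text.lower().split()}


def query_stage(query: str) -> str:
    """Query stage: adjust the user's query before retrieval starts."""
    return query.strip().lower()


def retrieval_stage(query: str, documents: list[str]) -> list[Chunk]:
    """Retrieval stage: keep documents that share at least one word with the query."""
    query_words = _words(query)
    chunks = []
    for document in documents:
        overlap = len(query_words & _words(document))
        if overlap:
            chunks.append(Chunk(document, float(overlap)))
    return chunks


def rerank_stage(chunks: list[Chunk], top_n: int = 2) -> list[Chunk]:
    """Rerank stage: order chunks by score and keep the best top_n."""
    return sorted(chunks, key=lambda chunk: chunk.score, reverse=True)[:top_n]


def response_stage(query: str, chunks: list[Chunk]) -> str:
    """Response stage: a real engine would prompt an LLM; this just returns the context."""
    context = " | ".join(chunk.text for chunk in chunks)
    return f"Q: {query} -> context: {context}"


def run_pipeline(query: str, documents: list[str]) -> str:
    """Run the four stages in order."""
    normalized = query_stage(query)
    return response_stage(normalized, rerank_stage(retrieval_stage(normalized, documents)))


if __name__ == "__main__":
    docs = [
        "Griptape is a Python framework.",
        "ComfyUI is a node editor.",
        "Bananas are yellow.",
    ]
    print(run_pipeline("What is Griptape?", docs))
```

Each function plays the role of one stage, and swapping the body of a stage for something smarter is roughly what connecting a different module to the engine node does.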

Aug 30, 2024

- Added `max_tokens` to most configuration and prompt_driver nodes. This gives you the ability to control how many tokens come back from the LLM. Note: It's a known issue that AmazonBedrock doesn't work with max_tokens at the moment.

- Added `Griptape Tool: Extraction` node that lets you extract either json or csv text with either a json schema or column header definitions. This works well with TaskMemory.

- Added `Griptape Tool: Prompt Summary` node that will summarize text. This works well with TaskMemory.

Aug 29, 2024

- Updated griptape version to 0.30.2 - This is a major change to how Griptape handles configurations, but I tried to ensure all nodes and workflows still work. Please let us know if there are any issues.

- Added `Griptape Tool: Query` node to allow Task Memory to go "Off Prompt"

Aug 27, 2024

- Fixed bugs where inputs of type "*" weren't working

- Updated frontend display of type `string` for `Griptape Display: Data as Text` node.

Aug 21, 2024

- Fixed querying for models in LMStudio and Ollama on import

Aug 20, 2024

- Update Griptape Framework to v0.29.2

- Modified ImageQueryTask to switch to a workflow if more than 2 images are specified

- Updated tests

Aug 4, 2024

- Updating Griptape Framework to v0.29.1

- Added `Griptape Config: Environment Variables` node to allow you to add environment variables to the graph

- Added `Griptape Text: Load` node to load a text file from disk

- Added Ollama Embedding Model

- Added GriptapeCloudKnowledgeBaseVectorStoreDriver that allows you to query a knowledge base in Griptape Cloud. Requires a Griptape Cloud account (https://cloud.griptape.ai), a Data Source, and a Knowledge Base. Also requires an API key: `GRIPTAPE_CLOUD_API_KEY` that you can get from your Griptape Cloud API Page.

Aug 3, 2024

- Reverted ollama and lmstudio configuration nodes to a list of installed models using new method for grabbing them.

July 29, 2024

- Temporarily replacing the ollama config nodes with a string input for specifying the model instead of a list of installed models.

July 27, 2024

- Updated menu items to be in a better order. Please provide feedback!

July 25, 2024

- Added separators to menu items in the RMB->Griptape menu to help group similar items.

July 24, 2024

- Added default colors to help differentiate between types of nodes. Tried to keep it minimal and distinct.

  - Agent support nodes (Rules, Tools, Drivers, Configurations): `Blue`. Rationale: Blue represents stability and foundational elements. Using it for all agent-supporting nodes shows their interconnected nature.
  - Agents: `Purple`. Rationale: Purple often represents special or unique elements. This makes Agents stand out as the central, distinct entities in the system.
  - Tasks: `Red`. Rationale: Red signifies important actions, fitting for task execution nodes.
  - Output nodes: `Black`. Rationale: Black provides strong contrast, suitable for final output display.
  - Utility nodes (Merge, Conversion, Text creation, Loaders): No color (`gray`). Rationale: Keeping utility functions in a neutral color helps reduce visual clutter and emphasizes their supporting role.

- New Node SaveText. This is a simple SaveText node as requested by a user. Please check it out and give feedback.

July 23, 2024

- Fixed bug with VectorStoreDrivers that would cause ComfyUI to fail loading if no OPENAI_API_KEY was present.

July 22, 2024

- New Nodes A massive amount of new nodes, allowing for ultimate configuration of an Agent.

  - Griptape Agent Configuration
    - Griptape Agent: Generic Structure - A Generic configuration node that lets you pick any combination of `prompt_driver`, `image_generation_driver`, `embedding_driver`, `vector_store_driver`, `text_to_speech_driver`, and `audio_transcription_driver`.
    - Griptape Replace: Rulesets on Agent - Gives you the ability to replace or remove rulesets from an Agent.
    - Griptape Replace: Tools on Agent - Gives you the ability to replace or remove tools from an Agent
  - Drivers
    - Prompt Drivers - Unique chat prompt drivers for `AmazonBedrock`, `Cohere`, `HuggingFace`, `Google`, `Ollama`, `LMStudio`, `Azure OpenAi`, `OpenAi`, `OpenAiCompatible`
    - Image Generation Drivers - These all existed before, but adding here for visibility: `Amazon Bedrock Stable Diffusion`, `Amazon Bedrock Titan`, `Leonardo AI`, `Azure OpenAi`, `OpenAi`
    - Embedding Drivers - Agents can use these for generating embeddings, allowing them to extract relevant chunks of data from text. `Azure OpenAi`, `Voyage Ai`, `Cohere`, `Google`, `OpenAi`, `OpenAi compatible`
    - Vector Store Drivers - Allows agents to access Vector Stores to query data: `Azure MongoDB`, `PGVector`, `Pinecone`, `Amazon OpenSearch`, `Qdrant`, `MongoDB Atlas`, `Redis`, `Local Vector Store`
    - Text To Speech Drivers - Gives agents the ability to convert text to speech. `OpenAi`, `ElevenLabs`
    - Audio Transcription Driver - Gives agents the ability to transcribe audio. `OpenAi`
    - re-fixed spelling of Compatable to Compatible, because it's a common mistake. :)
  - Vector Store - New Vector Store nodes - `Vector Store Add Text`, `Vector Store Query`, and `Griptape Tool: VectorStore` to allow you to work with various Vector Stores
  - Environment Variables parameters - all nodes that require environment variables & api keys have those environment variables specified on the nodes. This should make it easier to know what environment variables you want to set in `.env`.
  - Examples - Example workflows are now available in the `/examples` folder here.

- Breaking Change

  - There is no longer a need for an `ImageQueryDriver`, so the `image_query_model` input has been removed from the configuration nodes.
  - Due to how comfyUI handles input removal, the values of non-deleted inputs on some nodes may be broken. Please double-check your values on these Configuration nodes.

July 17, 2024

- Simplified API Keys by removing requirements for `griptape_config.json`. Now all keys are set in `.env`.

- Fixed bug where Griptape wouldn't launch if no `OPENAI_API_KEY` was set.

July 16, 2024

- Reorganized all the nodes so each class is in its own file. This should make things easier to maintain

- Added `max_attempts_on_fail` parameter to all Config nodes to allow the user to determine the number of retries they want when an agent fails. This maps to the `max_attempts` parameter in the Griptape Framework.

- NewNode: Audio Driver: Eleven Labs. Uses the ElevenLabs api. Takes a model, a voice, and the ELEVEN_LABS_API_KEY. https://elevenlabs.io/docs/voices/premade-voices#current-premade-voices

- NewNode: Griptape Run: Text to Speech task

- NewNode: Added AzureOpenAI Config node. To use this, you'll need to set up your Azure endpoint and get API keys. The two environment variables required are `AZURE_OPENAI_ENDPOINT` and `AZURE_OPENAI_API_KEY`. You will also require a deployment name. This is available in Azure OpenAI Studio

- Updated README
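As an illustration of what a retry limit like the one above does, here is a generic retry loop in plain Python. It is a sketch only: the helper `run_with_retries` is hypothetical, and it assumes the limit counts total attempts, which may not match exactly how the Griptape Framework counts them.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], max_attempts: int = 3, delay_seconds: float = 0.0) -> T:
    """Call task until it succeeds or max_attempts calls have failed."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as error:
            last_error = error
            # Optionally wait before the next attempt, but not after the final one.
            if attempt < max_attempts and delay_seconds > 0:
                time.sleep(delay_seconds)
    # Every attempt failed, so surface the most recent error to the caller.
    raise last_error


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky() -> str:
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise RuntimeError("temporary failure")
        return "ok"

    print(run_with_retries(flaky, max_attempts=3))
```

A higher limit makes an agent more tolerant of transient API errors at the cost of slower failures when something is really broken.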

July 12, 2024

- Updated to Griptape v0.28.2

- New Node Griptape Config: OpenAI Compatible node. Allows you to connect to services like https://www.ohmygpt.com/ which are compatible with OpenAi's api.

- New Node HuggingFace Prompt Driver Config

- Reorganized a few files

- Removed unused DuckDuckGoTool now that Griptape supports drivers.

July 11, 2024

- The Display Text node no longer clears its input if you disconnect it - which means you can use it as a way to generate a prompt, and then tweak it later.

- Added Convert Agent to Tool node, allowing you to create agents that have specific skills, and then give them to another agent to use when it feels it's appropriate.

July 10, 2024

- Updated to work with Griptape v0.28.1

- Image Description node now can handle multiple images at once, and works with Open Source llava.

- Fixed tool, config, ruleset, memory bugs for creating agents based on update to v0.28.0

- New Nodes Added WebSearch Drivers: DuckDuckGo and Google Search. To use Google Search, you must have two API keys - GOOGLE_API_KEY and GOOGLE_API_SEARCH_ID.

July 9, 2024

- Updated LMStudio and Ollama config nodes to use 127.0.0.1

- Updated `Create Agent` and `Run Agent` nodes to no longer cache their knowledge between runs. Now if the `agent` input isn't connected to anything, it will create a new agent on each run.

July 2, 2024

- All input nodes updated with dynamic inputs. Demonstration here: https://youtu.be/1fHAzKVPG4M?si=6JHe1NA2_a_nl9rG

- Fixed bug with Text to Combo node

Installation

1. ComfyUI

Install ComfyUI using the instructions for your particular operating system.

2. Use Ollama

If you'd like to run with a local LLM, you can use Ollama and install a model like llama3.

- Download and install Ollama from their website: https://ollama.com

- Download a model by running `ollama run <model>`. For example: `ollama run llama3`

- You now have ollama available to you. To use it, follow the instructions in this YouTube video: https://youtu.be/jIq_TL5xmX0?si=0i-myC6tAqG8qbxR

3. Install Griptape-ComfyUI

There are two methods for installing the Griptape-ComfyUI repository. You can either download or git clone this repository inside the ComfyUI/custom_nodes, or use the ComfyUI Manager.

- Option A - ComfyUI Manager (Recommended)

  - Install ComfyUI Manager by following the installation instructions.
  - Click Manager in ComfyUI to bring up the ComfyUI Manager
  - Search for "Griptape"
  - Find the ComfyUI-Griptape repo.
  - Click INSTALL
  - Follow the rest of the instructions.

- Option B - Git Clone

  - Open a terminal and input the following commands:

        cd /path/to/comfyUI
        cd custom_nodes
        git clone https://github.com/griptape-ai/ComfyUI-Griptape

4. Make sure libraries are loaded

Libraries should be installed automatically, but if you're having trouble, hopefully this can help.

There are certain libraries required for Griptape nodes that are called out in the requirements.txt file.

griptape[all]

python-dotenv

These should get installed automatically if you used the ComfyUI Manager installation method. However, if you're running into issues, please install them yourself either using pip or poetry, depending on your installation method.

- Option A - pip: `pip install "griptape[all]" python-dotenv`

- Option B - poetry: `poetry add "griptape[all]" python-dotenv`
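If you are not sure whether the libraries made it into your environment, a quick check like the sketch below can help. It only tests whether the two modules can be found by the Python interpreter that runs it, so run it with the same interpreter ComfyUI uses. The helper `missing_packages` is hypothetical and not part of the repo.

```python
import importlib.util

# Maps the importable module name to the package name you would install.
REQUIRED_MODULES = {"griptape": "griptape[all]", "dotenv": "python-dotenv"}


def missing_packages(required: dict = REQUIRED_MODULES) -> list:
    """Return the package names whose modules cannot be found by this interpreter."""
    missing = []
    for module_name, package_name in required.items():
        if importlib.util.find_spec(module_name) is None:
            missing.append(package_name)
    return missing


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required libraries were found.")
```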

5. Restart ComfyUI

Now if you restart comfyUI, you should see the Griptape menu when you click with the Right Mouse button.

If you don't see the menu, please come to our Discord and let us know what kind of errors you're getting - we would like to resolve them as soon as possible!

6. Set API Keys

For advanced features, it's recommended to use a more powerful model. These are available from the providers listed below, and will require API keys.

- To set an API key, click on the `Settings` button in the ComfyUI Sidebar.

- Select the `Griptape` option.

- Scroll down to the API key you'd like to set and enter it.

Note: If you already have a particular API key set in your environment, it will automatically show up here.

You can get the appropriate API keys from these respective sites:

- OPENAI_API_KEY: https://platform.openai.com/api-keys

- GOOGLE_API_KEY: https://makersuite.google.com/app/apikey

- AWS_ACCESS_KEY_ID & SECURITY_ACCESS_KEY:

  - Open the AWS Console
  - Click on your username near the top right and select Security Credentials
  - Click on Users in the sidebar
  - Click on your username
  - Click on the Security Credentials tab
  - Click Create Access Key
  - Click Show User Security Credentials

- LEONARDO_API_KEY: https://docs.leonardo.ai/docs/create-your-api-key

- ANTHROPIC_API_KEY: https://console.anthropic.com/settings/keys

- VOYAGE_API_KEY: https://dash.voyageai.com/

- HUGGINGFACE_HUB_ACCESS_TOKEN: https://huggingface.co/settings/tokens

- AZURE_OPENAI_ENDPOINT & AZURE_OPENAI_API_KEY: https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/switching-endpoints

- COHERE_API_KEY: https://dashboard.cohere.com/api-keys

- ELEVEN_LABS_API_KEY: https://elevenlabs.io/app/

  - Click on your username in the lower left
  - Choose Profile + API Key
  - Generate and copy the API key

- GRIPTAPE_CLOUD_API_KEY: https://cloud.griptape.ai/configuration/api-keys
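Since existing environment variables are picked up automatically, it can be handy to see which of the keys listed above are already set before opening the Settings window. The sketch below checks a subset of those names. The helper `key_status` is hypothetical, and it only reports whether a value is present, never the value itself.

```python
import os

# A subset of the variable names listed in this section.
KEY_NAMES = [
    "OPENAI_API_KEY",
    "GOOGLE_API_KEY",
    "LEONARDO_API_KEY",
    "ANTHROPIC_API_KEY",
    "VOYAGE_API_KEY",
    "HUGGINGFACE_HUB_ACCESS_TOKEN",
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "COHERE_API_KEY",
    "ELEVEN_LABS_API_KEY",
    "GRIPTAPE_CLOUD_API_KEY",
]


def key_status(names=KEY_NAMES, environ=None) -> dict:
    """Map each variable name to True if it has a non-empty value, else False."""
    env = os.environ if environ is None else environ
    return {name: bool(env.get(name, "").strip()) for name in names}


if __name__ == "__main__":
    for name, is_set in key_status().items():
        print(f"{name}: {'set' if is_set else 'not set'}")
```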

Troubleshooting

Torch issues

Griptape installs torch as a requirement. Sometimes this may cause problems with ComfyUI where it grabs the wrong version of torch, especially if you're on Nvidia. As per the ComfyUI docs, you may need to uninstall and re-install torch.

pip uninstall torch

pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu121
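To see which torch build you ended up with, before or after reinstalling, something like the following sketch can be run with ComfyUI's Python interpreter. The helper `torch_report` is hypothetical. It relies on `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`, and returns early if torch is not installed at all.

```python
import importlib.util


def torch_report() -> dict:
    """Summarize the installed torch build, or report that torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}
    import torch  # imported lazily so the check also works without torch

    return {
        "installed": True,
        "version": torch.__version__,
        # None here means a CPU-only build was installed.
        "cuda_build": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    print(torch_report())
```

On an Nvidia machine, a `cuda_build` of `None` or `cuda_available` of `False` suggests the wrong build was picked up.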

Griptape Not Updating

Sometimes you'll find that the Griptape library didn't get updated properly. This seems to be especially happening when using the ComfyUI Manager. You might see an error like:

ImportError: cannot import name 'OllamaPromptDriver' from 'griptape.drivers' (C:\Users\evkou\Documents\Sci_Arc\Sci_Arc_Studio\ComfyUi\ComfyUI_windows_portable\python_embeded\Lib\site-packages\griptape\drivers\__init__.py)

To resolve this, you must make sure Griptape is running with the appropriate version. Things to try:

- Update again via the ComfyUI Manager

- Uninstall & Re-install the Griptape nodes via the ComfyUI Manager

- In the terminal, go to your ComfyUI directory and type: `python -m pip install griptape -U`

- Reach out on Discord and ask for help.
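To confirm which version of Griptape your environment actually has after trying the steps above, you can query the package metadata. This is a small sketch using the standard library. The helper `installed_version` is hypothetical, and it should be run with the same Python interpreter that ComfyUI uses.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Return the installed version string of a package, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("griptape")
    if found is None:
        print("griptape is not installed in this environment")
    else:
        print(f"griptape {found} is installed")
```

Comparing the printed version with the one named in the latest changelog entry shows whether the update really took effect.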

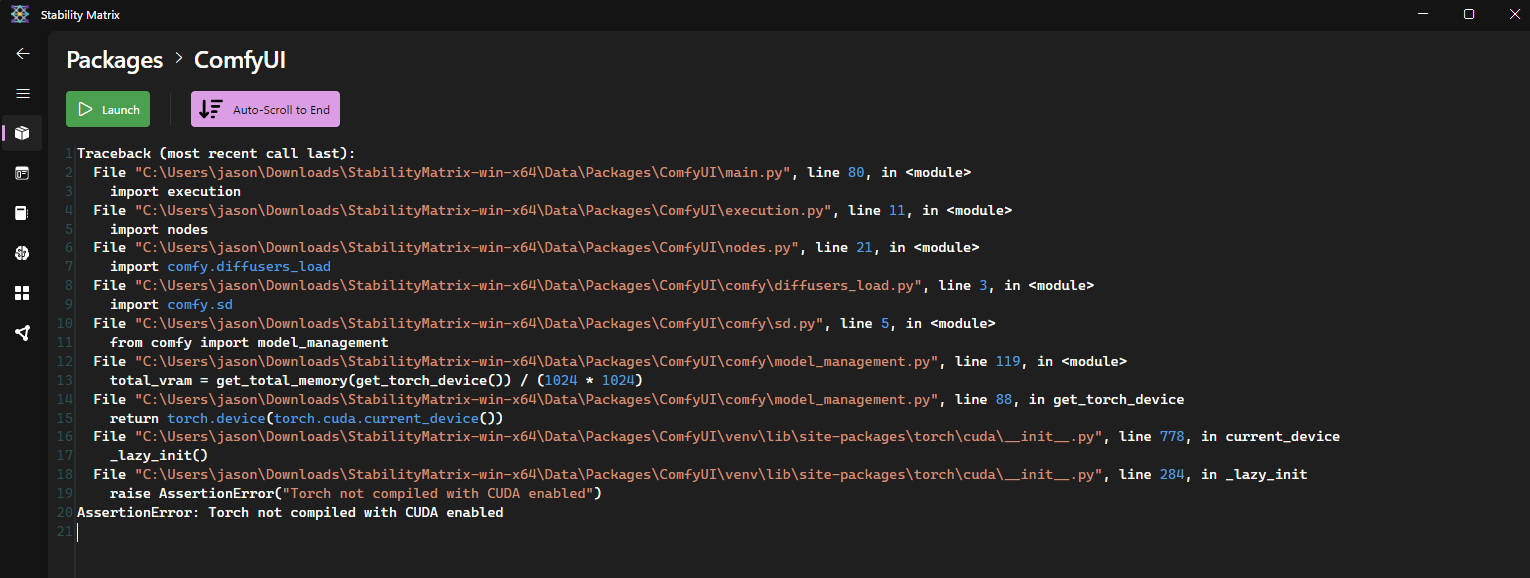

StabilityMatrix

If you are using StabilityMatrix to run ComfyUI, you may find that after you install Griptape you get an error like the following:

To resolve this, you'll need to update your torch installation. Follow these steps:

- Click on Packages to go back to your list of installed Packages.

- In the ComfyUI card, click the vertical `...` menu.

- Choose Python Packages to bring up your list of Python Packages.

- In the list of Python Packages, search for `torch` to filter the list.

- Select torch and click the `-` button to uninstall `torch`.

- When prompted, click Uninstall

- Click the `+` button to install a new package.

- Enter the list of packages: `torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu121`

- Click OK.

- Wait for the install to complete.

- Click Close.

- Launch ComfyUI again.

Thank you

Massive thank you for help and inspiration from the following people and repos!

- Jovieux from https://github.com/Amorano/Jovimetrix

- rgthree https://github.com/rgthree/rgthree-comfy

- IF_AI_tools https://github.com/if-ai/ComfyUI-IF_AI_tools/tree/main