
ComfyDeploy: How does ComfyUI-Zero123-Porting work in ComfyUI?

What is ComfyUI-Zero123-Porting?

Zero-1-to-3: Zero-shot One Image to 3D Object. An unofficial port of the original [Zero123](https://github.com/cvlab-columbia/zero123).

How do I install it in ComfyDeploy?

Head over to the machine page

- Click on the "Create a new machine" button
- Select the "Edit" build steps
- Add a new step -> Custom Node
- Search for ComfyUI-Zero123-Porting and select it
- Close the build step dialog and then click on the "Save" button to rebuild the machine

ComfyUI Node for Zero-1-to-3: Zero-shot One Image to 3D Object

This is an unofficial port of Zero123 (https://zero123.cs.columbia.edu/) for ComfyUI. Zero123 is a framework for changing the camera viewpoint of an object given just a single RGB image.

This port lets you generate 3D-rotated images of an object in ComfyUI.

Quick Start

After installing this node, download the sample workflow to start experimenting.

If you have any questions or suggestions, please don't hesitate to leave them in the issue tracker.

Node and Workflow

Node Zero123: Image Rotate in 3D

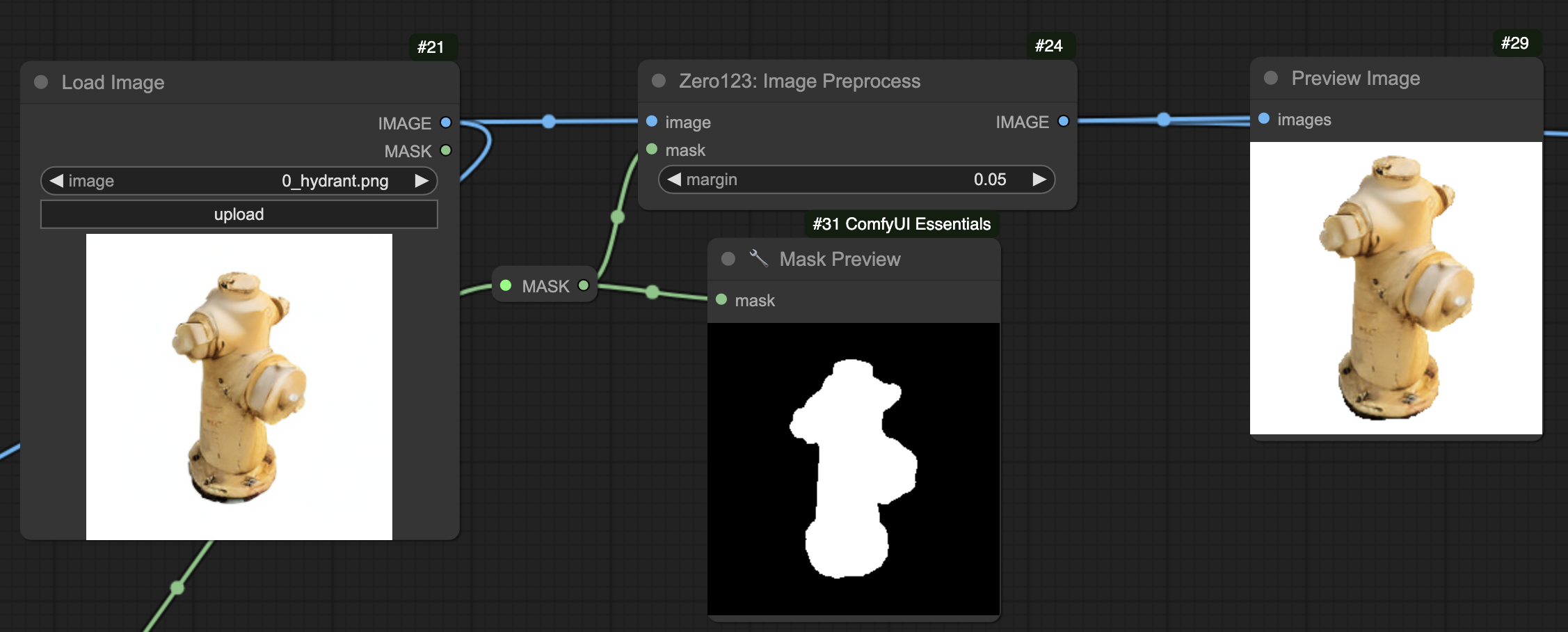

Node Zero123: Image Preprocess

PREREQUISITES

- INPUT image must be square (width = height); otherwise, this node will automatically force it into a square.
- INPUT image should be an object on a white background, which means you need to preprocess the image (use `Zero123: Image Preprocess`).
- OUTPUT image only supports 256x256 (fixed) currently; you can upscale it later.
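How the node forces a non-square input into a square is not described here. As a minimal sketch, assuming the image is padded with white up to its longer side (the real node may crop or resize instead), the padding could be computed as below. `square_padding` and `pad_to_square` are hypothetical helpers, and the image is modeled as a list of rows of RGB tuples so the example has no dependencies.

```python
from __future__ import annotations

# Hypothetical helpers: one way to make an image square on a white background.
# The image is a list of rows; each row is a list of (R, G, B) tuples.
WHITE = (255, 255, 255)


def square_padding(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) padding that centers the image on a square."""
    side = max(width, height)
    pad_x, pad_y = side - width, side - height
    left, top = pad_x // 2, pad_y // 2
    # Any odd leftover pixel goes to the right/bottom edge.
    return left, top, pad_x - left, pad_y - top


def pad_to_square(pixels: list[list[tuple[int, int, int]]]) -> list[list[tuple[int, int, int]]]:
    """Pad an image with white so that width == height, keeping the content centered."""
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    left, top, right, bottom = square_padding(width, height)
    side = max(width, height)
    out = [[WHITE] * side for _ in range(top)]
    for row in pixels:
        out.append([WHITE] * left + list(row) + [WHITE] * right)
    out.extend([WHITE] * side for _ in range(bottom))
    return out


if __name__ == "__main__":
    black = (0, 0, 0)
    image = [[black] * 4 for _ in range(2)]  # 4 pixels wide, 2 tall
    squared = pad_to_square(image)
    print(len(squared), len(squared[0]))  # 4 4
```

Padding keeps the whole object visible, whereas center-cropping would risk cutting part of it off; that trade-off is why padding was chosen for this sketch.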

Explanation

Node Zero123: Image Rotate in 3D Input and Output

INPUT

- image: input image; it should be a square image of an object on a white background.
- polar_angle: angle around the x axis, turning the view up or down. <0.0: turn up; >0.0: turn down.
- azimuth_angle: angle around the y axis, turning the view left or right. <0.0: turn left; >0.0: turn right.
- scale: the z axis, i.e. far away or near. >1.0 means bigger, or nearer; 0 < scale < 1.0 means smaller, or farther away; 1.0 means no change.
- steps: 75 is the default value in the original zero123 repo; do not set it lower than 75.
- batch_size: how many images you would like to generate.
- fp16: whether to load the model in fp16. Enabling it can speed up generation and save GPU memory.
- checkpoint: the model you select. zero123-xl is the latest one, and stable-zero123 claims to be the best, but a license is required for commercial use.
- height: output height, fixed to 256, information only
- width: output width, fixed to 256, information only
- sampler: cannot be changed, information only
- scheduler: cannot be changed, information only
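The sign conventions and limits above can be summarized in a short sketch. It is illustrative only: `describe_motion` and `check_inputs` are hypothetical helpers that restate the documented rules, and `pose_embedding` shows the relative-viewpoint vector (polar in radians, sin of azimuth, cos of azimuth, radius change) that, as we understand it, the original zero123 code conditions on. How this port maps `scale` to the radius term is not stated here, so `delta_radius` is passed in directly as an assumption.

```python
import math


def describe_motion(polar_deg: float, azimuth_deg: float, scale: float) -> str:
    """Hypothetical helper: put the node's documented sign conventions into words."""
    parts = []
    if polar_deg < 0:
        parts.append(f"turn up {abs(polar_deg)} deg")
    elif polar_deg > 0:
        parts.append(f"turn down {polar_deg} deg")
    if azimuth_deg < 0:
        parts.append(f"turn left {abs(azimuth_deg)} deg")
    elif azimuth_deg > 0:
        parts.append(f"turn right {azimuth_deg} deg")
    if scale > 1.0:
        parts.append("bigger (nearer)")
    elif 0 < scale < 1.0:
        parts.append("smaller (farther away)")
    return ", ".join(parts) or "no change"


def check_inputs(scale: float, steps: int, batch_size: int) -> list:
    """Hypothetical helper: flag values the documentation advises against."""
    problems = []
    if scale <= 0:
        problems.append("scale must be greater than 0")
    if steps < 75:
        problems.append("steps below 75 (the original zero123 default) is not recommended")
    if batch_size < 1:
        problems.append("batch_size must be at least 1")
    return problems


def pose_embedding(polar_deg: float, azimuth_deg: float, delta_radius: float) -> list:
    """Relative viewpoint as [polar (rad), sin(azimuth), cos(azimuth), delta_radius].

    delta_radius is an assumed input; its relation to the node's `scale` is not documented.
    """
    az = math.radians(azimuth_deg)
    return [math.radians(polar_deg), math.sin(az), math.cos(az), delta_radius]


if __name__ == "__main__":
    print(describe_motion(-10.0, 30.0, 1.5))
    print(check_inputs(scale=1.0, steps=50, batch_size=1))
    print(pose_embedding(-10.0, 30.0, 0.0))
```

Encoding the azimuth as a sine/cosine pair keeps 0 and 360 degrees at the same point, which is why the embedding does not use the raw angle.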

OUTPUT

- images : the output images

Node Zero123: Image Preprocess Input and Output

INPUT

- image: the original input image.
- mask: the mask of the image.
- margin: the margin of the output image, as a percentage (%).

OUTPUT

- image: the processed, square version of the input image, with a white background and the subject in the center.
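The exact way the node applies `margin` is not spelled out above. As a minimal sketch, assuming the margin is the percentage of the output side left empty on each side of the subject, the square canvas size and the subject's offset could be derived from the mask's bounding box as follows. `mask_bbox` and `square_canvas` are hypothetical helpers, and the mask is modeled as a list of rows of 0/1 values.

```python
from __future__ import annotations


def mask_bbox(mask: list[list[int]]) -> tuple[int, int, int, int] | None:
    """Return (x0, y0, x1, y1) of nonzero mask pixels (x1/y1 exclusive), or None if empty."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not ys:
        return None
    width = len(mask[0])
    xs = [x for x in range(width) if any(row[x] for row in mask)]
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1


def square_canvas(bbox: tuple[int, int, int, int], margin_pct: float) -> tuple[int, int, int]:
    """Return (side, paste_x, paste_y) for centering the subject on a square canvas.

    Assumption: margin_pct percent of the output side is left empty on each side.
    """
    if not 0 <= margin_pct < 50:
        raise ValueError("margin_pct must be in [0, 50)")
    x0, y0, x1, y1 = bbox
    w, h = x1 - x0, y1 - y0
    subject = max(w, h)
    # The subject fills (100 - 2 * margin_pct) percent of the side.
    side = round(subject / (1 - 2 * margin_pct / 100))
    # Center the (possibly non-square) subject crop on the canvas.
    return side, (side - w) // 2, (side - h) // 2
```

In this sketch, the subject would then be cut out with the mask, pasted at (paste_x, paste_y) on a white canvas of size side x side, and resized to 256x256 for the rotate node.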

Tips

- To preprocess an image, segment out the subject and remove the entire background.

- Use image crop to focus on the main subject and make a square image.

- Try generating multiple images and select the best one.

- Upscale the output for final use.

Installation

By ComfyUI Manager

Custom Nodes

Search for zero123, select this repo, and install it.

Models

Search for zero123 and install the model you like, such as zero123-xl.ckpt or stable-zero123 (a license is required for commercial use).

Manual Installation

Custom Nodes

cd ComfyUI/custom_nodes

git clone https://github.com/kealiu/ComfyUI-Zero123-Porting.git

cd ComfyUI-Zero123-Porting

pip install -r requirements.txt

Then restart ComfyUI and refresh your browser.

Models

Check model-list.json for the model download URLs. The models should be placed under ComfyUI/models/checkpoints/zero123/.
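To confirm the models ended up in the right place, a small check like the following may help. It is only a sketch: `find_zero123_checkpoints` is a hypothetical helper, and it assumes the model files use the `.ckpt` extension (as `zero123-xl.ckpt` does); adjust the suffix if model-list.json lists other formats.

```python
from __future__ import annotations

import sys
from pathlib import Path


def find_zero123_checkpoints(comfyui_root: str | Path) -> list[str]:
    """List .ckpt files under <comfyui_root>/models/checkpoints/zero123/."""
    model_dir = Path(comfyui_root) / "models" / "checkpoints" / "zero123"
    if not model_dir.is_dir():
        return []
    return sorted(p.name for p in model_dir.iterdir() if p.is_file() and p.suffix == ".ckpt")


if __name__ == "__main__":
    # Pass the ComfyUI root as the first argument; defaults to ./ComfyUI.
    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    found = find_zero123_checkpoints(root)
    print(found if found else "No zero123 checkpoints found")
```

Saved as, for example, a hypothetical check_models.py, it can be run with `python check_models.py /path/to/ComfyUI` before restarting ComfyUI.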

Zero123 related works

- zero123 by the Zero123 authors (cvlab-columbia), the original one. This repo is ported from it.
- stable-zero123 by Stability AI, which trains models on more data and claims to produce better output.
- zero123++ by Sudo AI, which open-sources a model that always generates images at fixed angles.

Thanks to

Zero-1-to-3: Zero-shot One Image to 3D Object, which learns control mechanisms that manipulate the camera viewpoint in large-scale diffusion models.

@misc{liu2023zero1to3,
  title={Zero-1-to-3: Zero-shot One Image to 3D Object},
  author={Ruoshi Liu and Rundi Wu and Basile Van Hoorick and Pavel Tokmakov and Sergey Zakharov and Carl Vondrick},
  year={2023},
  eprint={2303.11328},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}