
ComfyDeploy: How does ComfyUI AnyNode: Any Node you ask for work in ComfyUI?

What is ComfyUI AnyNode: Any Node you ask for?

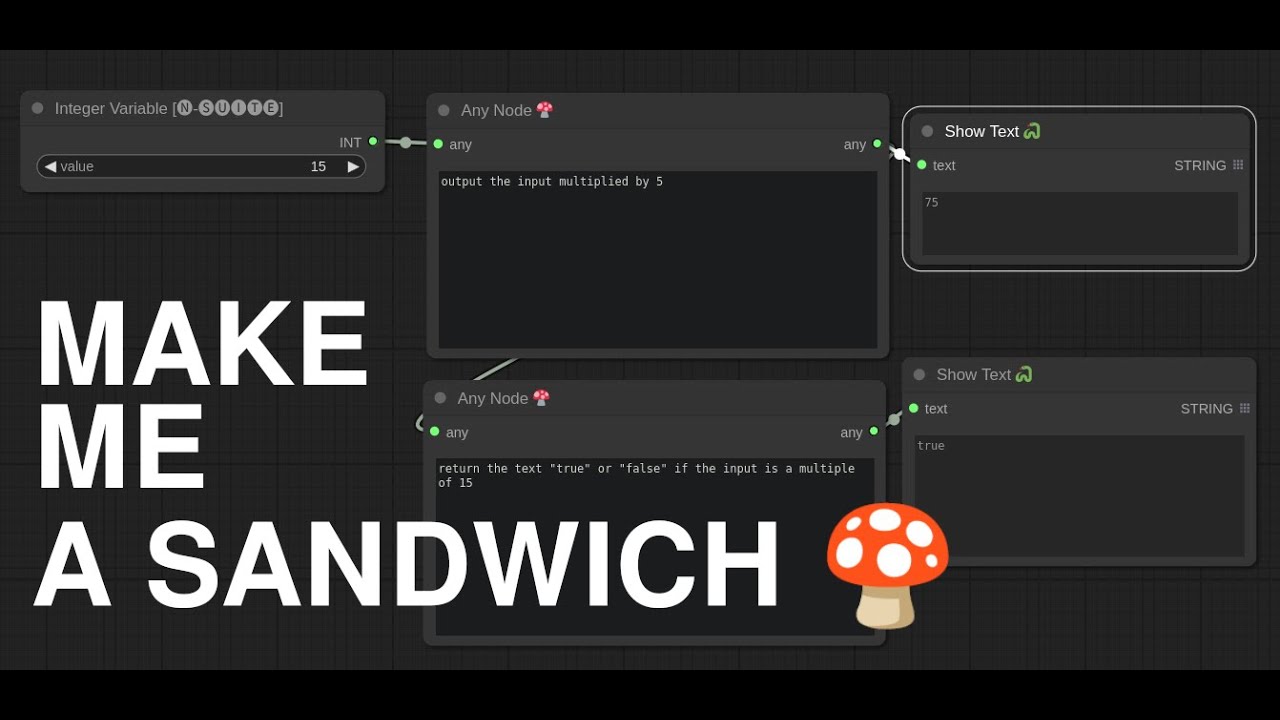

Nodes: AnyNode. Nodes that can be anything you ask for. Auto-generate functional nodes using LLMs. Create impossible workflows. API compatibility: OpenAI, local LLMs, Gemini.

How to install it in ComfyDeploy?

Head over to the machine page

- Click on the "Create a new machine" button

- Select the `Edit` build steps

- Add a new step -> Custom Node

- Search for `ComfyUI AnyNode: Any Node you ask for` and select it

- Close the build step dialog and then click on the "Save" button to rebuild the machine

AnyNode v0.1 (🍄 beta)

A ComfyUI Node that uses the power of LLMs to do anything with your input to make any type of output.

📺 More Tutorials on AnyNode at YouTube

Join our Discord

Install

- Clone this repository into `comfy/custom_nodes`, or just search for `AnyNode` in ComfyUI Manager

- If you're using the OpenAI API, follow the OpenAI Instructions

- If you're using Gemini, follow the Gemini Instructions

- If you're using a local LLM API, make sure your LLM server (Ollama, etc.) is running

- Restart Comfy

- In ComfyUI, double-click and search for `AnyNode`, or find it under Nodes > utils

OpenAI Instructions

- Make sure you have the `openai` module installed through pip: `pip install openai`

- Add your `OPENAI_API_KEY` variable to your environment variables. How to get your OpenAI API key

AnyNode 🍄 is the node that uses OpenAI directly, with the latest ChatGPT model (whichever that may be at the time).

Gemini Instructions

- You don't need any extra modules, so don't worry about that

- Add your `GOOGLE_API_KEY` variable to your environment variables. How to get your Google API key

AnyNode 🍄 (Gemini) is still being tested, so it probably contains bugs. I will update this today.
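Before restarting Comfy, it can help to confirm that the keys are actually visible to the Python process that runs ComfyUI. A minimal sketch, assuming only the two variable names given above (`OPENAI_API_KEY` for AnyNode 🍄, `GOOGLE_API_KEY` for AnyNode 🍄 (Gemini)); the `REQUIRED_KEYS` mapping and the `missing_api_keys` helper are hypothetical and not part of AnyNode:

```python
import os

# Hypothetical helper: variable names come from the OpenAI and Gemini
# instructions above; this is not AnyNode's own startup check.
REQUIRED_KEYS = {
    "AnyNode 🍄": "OPENAI_API_KEY",
    "AnyNode 🍄 (Gemini)": "GOOGLE_API_KEY",
}


def missing_api_keys(environ=None):
    """Return the node variants whose API key variable is unset or empty."""
    env = os.environ if environ is None else environ
    return [node for node, var in REQUIRED_KEYS.items() if not env.get(var)]
```

Calling `missing_api_keys()` with no argument checks the real environment; passing a plain dict makes it easy to test.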

Local LLMs

We now have an AnyNode 🍄 (Gemini) node and our big star: the AnyNode 🍄 (Local LLM) node.

This was the most requested feature since Day 1. The classic AnyNode 🍄 will still use OpenAI directly.

- You can set each Local LLM node to use a different local or hosted service, as long as it's OpenAI-compatible

- This means you can use Ollama, vLLM and any other local LLM server, from wherever you want
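"OpenAI-compatible" means the server exposes the same ChatCompletions endpoint as the OpenAI API, so switching services mostly comes down to changing the base URL. A minimal standard-library sketch that builds (but does not send) such a request; the base URL `http://localhost:11434/v1` is Ollama's usual OpenAI-compatible address and `llama3` is only an example model name, neither is an AnyNode setting, and `build_chat_request` is a hypothetical helper:

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build a ChatCompletions POST request for an OpenAI-compatible server (hypothetical helper)."""
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # local servers often don't check this; hosted services usually do
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"), headers=headers, method="POST"
    )


# Example values only: Ollama's default port and an illustrative model name.
req = build_chat_request(
    "http://localhost:11434/v1", "llama3", "Write a Python function that inverts an image."
)
```

Passing `req` to `urllib.request.urlopen` would send it; in the JSON reply, the generated text sits at `choices[0]["message"]["content"]`.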

A Note about Security for the Local LLM variant

AnyNode works by executing the Python code that comes back from the server's ChatCompletions endpoint. To put that into perspective: wherever you point it, you are giving that place some control over Python on your machine. BE CAREFUL: if you are not pointing it at localhost, make sure you absolutely trust the address you enter as the server.
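One way to act on that warning is to treat any server address that is not a loopback address as remote and double-check it before queueing. A minimal standard-library sketch; `is_local_server` is a hypothetical helper, not something AnyNode ships, and a `True` result says nothing about whether the code that comes back is safe:

```python
import ipaddress
from urllib.parse import urlparse


def is_local_server(url):
    """True if the URL's host is 'localhost' or a loopback IP (127.0.0.0/8, ::1). Hypothetical helper."""
    host = urlparse(url).hostname  # lower-cased, IPv6 brackets stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # any other DNS name is treated as remote
```

A LAN address such as `192.168.x.x` counts as remote here, which matches the advice above: only localhost is implicitly trusted.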

How it Works

- Put in what you want the node to do with the input and output

- Connect it up to anything on both sides

- Hit `Queue Prompt` in ComfyUI

AnyNode writes a Python function based on your request and whatever input you connect to it. That function generates the output you asked for, which you can then connect to compatible nodes.
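The "writes a Python function" step boils down to pulling the function's source out of the LLM reply and loading it so it can be called with the node's inputs. A minimal sketch, assuming the reply wraps the code in a Markdown code fence and defines a function named `generated_function` (both are assumptions; AnyNode's actual prompt and naming may differ). It performs no safety checks at all, which is exactly why the sanitizer described under Security Features exists:

```python
import re

# Build the fence marker from single backticks so this snippet contains no literal fence.
TICKS = "`" * 3
FENCE = re.compile(TICKS + r"(?:python)?\s*\n(.*?)" + TICKS, re.DOTALL)


def load_generated_function(reply, name="generated_function", env=None):
    """Extract the first fenced block (or the whole reply) and return the function `name`.

    `generated_function` is a hypothetical default name, not AnyNode's convention.
    """
    match = FENCE.search(reply)
    source = match.group(1) if match else reply
    namespace = dict(env or {})  # globals the generated code is allowed to see
    exec(source, namespace)  # UNSAFE on untrusted text: sanitize before reaching this point
    return namespace[name]


reply = "Sure!\n" + TICKS + "python\ndef generated_function(x):\n    return x * 2\n" + TICKS
double = load_generated_function(reply)
print(double(21))  # 42
```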

Update: It can make you a sandwich

Courtesy of synthetic ape

Warning: Because of the ability to link ANY node, you can crash ComfyUI if you are not careful.

🛡️ Security Features

You shouldn't trust an LLM with your computer, and we don't either.

Code Sanitizer: Every piece of code that the LLM outputs goes through a sanitizer before it is loaded into the environment or executed. If you see errors about dangerous code... that's the sanitizer.
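AnyNode's actual sanitizer rules aren't spelled out here, but the general technique is to parse the generated code and reject it before execution if it references anything on a deny-list. A minimal sketch using Python's `ast` module; the `BANNED_NAMES` set and the dunder-attribute rule are illustrative assumptions, and a deny-list on its own is not a complete sandbox:

```python
import ast

# Illustrative deny-list only; not AnyNode's real rule set.
BANNED_NAMES = {
    "eval", "exec", "compile", "open", "__import__",
    "globals", "locals", "getattr", "setattr", "input",
}


def find_dangerous_code(source):
    """Return a list of problems found in `source`; an empty list means it passed."""
    problems = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name) and node.id in BANNED_NAMES:
            problems.append(f"line {node.lineno}: use of {node.id!r}")
        elif isinstance(node, ast.Attribute) and node.attr.startswith("__"):
            # Dunder attributes are a classic route to escaping restrictions
            problems.append(f"line {node.lineno}: dunder attribute {node.attr!r}")
    return problems
```

Parsing with `ast` instead of searching the text means a banned word inside a string or comment doesn't trigger a false alarm.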

No Internet, No Files, No Command Line: As a safety feature, AnyNode does not have the ability to generate functions that browse the internet or touch the files on your computer. If you need to load something into Comfy or get stuff from the internet, there are plenty of loader nodes available in popular node packs on Manager.

Curated Imports: We only let AnyNode use libraries from the list of Allowed Imports. Anything else will not even be in the function's runtime environment and will give you an error. This is a feature. If you want a library that isn't on that list added to AnyNode, let us know on the Discord or open an Issue.
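An allow-list works the other way around: every import in the generated code must name a permitted top-level package. A minimal sketch with `ast`; `ALLOWED_IMPORTS` below is an illustrative subset built from libraries named elsewhere on this page (numpy, torch, collections, re), not AnyNode's actual Allowed Imports list. Rejecting modules such as `os`, `socket` or `subprocess` this way is one means of keeping file, network and command-line access out of reach:

```python
import ast

# Illustrative subset only; AnyNode's real Allowed Imports list lives in the project.
ALLOWED_IMPORTS = {"numpy", "torch", "collections", "re", "math"}


def disallowed_imports(source):
    """Return the sorted imported module names whose top-level package is not allowed."""
    bad = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            names = [node.module or "."]  # relative imports are never allowed
        else:
            continue
        for name in names:
            if name.split(".")[0] not in ALLOWED_IMPORTS:
                bad.add(name)
    return sorted(bad)
```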

Note: AnyNode can use the `openai` and Google Generative AI libraries in the functions it generates. So you can paste an example from OpenAI's API docs, ask it to use the latest from OpenAI, and get it to stream a TTS audio file to your computer; that is a supported library, so it's fine.

🤔 Caveats

- I have no idea how far you can take this or what its limits are

- LLMs can't read your mind. To make complex stuff in one node, you'd have to know a bit about programming

- The smaller the LLM you use to code your nodes, the weaker its coding skills are likely to be

- Right now you can only see the code the LLM generates in the console

- ~~Can't make a sandwich~~

💪 Strengths

- Use OpenAI (AnyNode 🍄), local LLMs (AnyNode 🍄 (Local LLM)) or Gemini (AnyNode 🍄 (Gemini))

- You can use as many of these as you want in your workflow, possibly creating complex node groups

- Really great at single purpose nodes

- Uses OpenAI API for simple access to the latest and greatest in generation models

- Technically you could point this at vLLM, LM Studio or Ollama, for you LocalLLM fans

- Can use most popular Python libraries and most of the standard ones (e.g. numpy, torch, collections, re)

- Ability to make more complex nodes that use inputs like MODEL, VAE and CLIP with input type awareness

- Error Mitigation: auto-corrects errors it made in code (just press `Queue Prompt` again)

- Incremental Code Editing: the last generated function serves as an example for the next generation

- Copying the cool nodes you prompt is as easy as copying the workflow

- Saves the generated functions registry `json` to `output/anynode` so you can bundle it with your workflow

- Can make more complex functions with two optional inputs to the node

- IT CAN MAKE A SANDWICH!
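The Error Mitigation and Incremental Code Editing items both come down to what goes back into the next prompt: the previous function and, if it failed, the error it raised. A minimal sketch of assembling such a prompt; the wording and the `build_prompt` helper are hypothetical, not AnyNode's actual system prompt:

```python
def build_prompt(request, previous_code=None, error=None):
    """Assemble a code-generation prompt that reuses the last function and its error (hypothetical)."""
    parts = [f"Write a Python function for a ComfyUI node that does this: {request}"]
    if previous_code:
        # Incremental editing: the last generated function is the starting point
        parts.append("Previous version of the function:\n" + previous_code)
    if error:
        # Error mitigation: show the error so the model can correct its own mistake
        parts.append("It failed with this error, please fix it:\n" + error)
    return "\n\n".join(parts)
```

On the first run only the request is sent; after a failure, pressing Queue Prompt again would send all three parts.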

🛣️ Roadmap

- Export to Node: Compile a new comfy node from your AnyNode (Requires restart to use your new node)

- Downstream Error Mitigation: Perform error mitigation on outputs to other nodes (expectation management)

- RAG based function storage and semantic search across comfy modules (not a pipe dream)

- Persistent data storage in the AnyNode (functions store extra data for iterative processing or persistent memory)

- Expanding NodeAware to include full Workspace Awareness

- Node Recommendations: AnyNode will recommend you or even load some nodes into the workflow based on your input

Coding Errors you Might Encounter

As with any LLM or text-generating language model, when it comes to coding it can sometimes make mistakes that it can't fix by itself, even if you show it the error of its ways. A lot of these can be mitigated by modifying your prompt. If you encounter some of the known ones, we have some prompt engineering solutions here for you.

For this, I recommend that you join our Discord and report the bug there. Often AnyNode will fix the bug, if it happened within your generated function, when you just click Queue Prompt again.

If you're still here

Let's enjoy some stuff I made while up all night!

This one, well... the prompts explain it all, but TLDR; It takes an image as input and outputs only the red channel of that image.
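For reference, the kind of function AnyNode might produce for that red-channel request is only a few lines. A minimal sketch, assuming ComfyUI's usual IMAGE layout: a float batch shaped `[batch, height, width, channels]` with values in 0..1. It uses a NumPy array so it runs standalone; ComfyUI actually passes a `torch.Tensor`, where `.clone()` takes the place of `.copy()`:

```python
import numpy as np


def red_channel_only(image):
    """Keep the red channel and zero green and blue; the input is left untouched.

    Assumed layout: [batch, height, width, 3], floats in 0..1.
    """
    out = image.copy()
    out[..., 1:3] = 0.0
    return out


img = np.random.default_rng(0).random((1, 4, 4, 3), dtype=np.float32)
red = red_channel_only(img)
```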

Here I use three AnyNodes: One to load a file, one to summarize the text in that file, and the other to just do some parsing of that text. No coding needed.

I took that Ant example a bit further and added in the normal nodes to do img2img with my color transforms from AnyNode

Here I ask for an Instagram-like sepia tone filter for my AnyNode... I titled the node Image Filter just so I can remember what it's supposed to be doing in the workflow
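A common way to implement a sepia tone is a fixed 3x3 color matrix applied to every pixel, followed by clipping back into range. A minimal sketch using the widely used sepia coefficients, assuming a float image batch shaped `[batch, height, width, 3]` in 0..1 (ComfyUI's usual IMAGE layout, shown with NumPy rather than torch); this is one standard filter, not necessarily what AnyNode generated for the prompt above:

```python
import numpy as np

# Classic sepia matrix: row i holds the weights of input R, G, B for output channel i
SEPIA = np.array([
    [0.393, 0.769, 0.189],
    [0.349, 0.686, 0.168],
    [0.272, 0.534, 0.131],
])


def sepia(image):
    """Apply the sepia matrix per pixel and clip to 0..1 (assumed [B, H, W, 3] float input)."""
    out = image[..., :3] @ SEPIA.T  # (..., 3) @ (3, 3) -> (..., 3)
    return np.clip(out, 0.0, 1.0).astype(image.dtype)


white = np.ones((1, 1, 1, 3), dtype=np.float32)
print(sepia(white)[0, 0, 0])  # R and G clip to 1.0, B is about 0.937
```

The clip matters because the R and G rows sum to more than 1, so bright pixels would otherwise overflow the 0..1 range.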

Let's try a much more complex description of an HSV transform, but still in plain English. And we get a node that will randomly filter HSV every time it's run!

Here's that workflow
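A random HSV filter of that kind can be sketched by converting each pixel to HSV, shifting the hue and scaling saturation and value by random amounts, then converting back. A minimal, deliberately simple sketch that applies the standard-library `colorsys` functions through `np.vectorize` (slow on large images, but easy to read); the random ranges are arbitrary assumptions, and the image is assumed to be a float `[batch, height, width, 3]` array in 0..1:

```python
import colorsys

import numpy as np

# np.vectorize returns a tuple of arrays when the wrapped function returns a tuple
rgb_to_hsv = np.vectorize(colorsys.rgb_to_hsv)
hsv_to_rgb = np.vectorize(colorsys.hsv_to_rgb)


def hsv_filter(image, hue_shift, sat_scale, val_scale):
    """Shift hue (in turns, 0..1) and scale saturation/value, clipping to the valid range."""
    h, s, v = rgb_to_hsv(image[..., 0], image[..., 1], image[..., 2])
    h = (h + hue_shift) % 1.0
    s = np.clip(s * sat_scale, 0.0, 1.0)
    v = np.clip(v * val_scale, 0.0, 1.0)
    r, g, b = hsv_to_rgb(h, s, v)
    return np.stack([r, g, b], axis=-1).astype(image.dtype)


def random_hsv_filter(image, rng=None):
    """Draw new HSV adjustments on every call; the ranges below are arbitrary choices."""
    rng = np.random.default_rng() if rng is None else rng
    return hsv_filter(
        image,
        hue_shift=rng.uniform(0.0, 1.0),
        sat_scale=rng.uniform(0.5, 1.5),
        val_scale=rng.uniform(0.8, 1.2),
    )
```

Splitting the deterministic `hsv_filter` from the random wrapper keeps the transform itself easy to check, for example that a zero hue shift with unit scales leaves the image unchanged.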

Then I ask for a more legacy Instagram filter (normally it would pop the saturation and warm the light up, which it did!)

How about a psychedelic filter?

Here I ask it to make a "sota edge detector" for the output image, and it makes me a pretty cool Sobel filter. And I pretend that I'm on the moon.

Here's that workflow
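A Sobel edge detector estimates horizontal and vertical intensity gradients with two 3x3 kernels and combines them into a gradient magnitude. A minimal NumPy sketch, assuming a float `[batch, height, width, 3]` image in 0..1; the grayscale weights (ITU-R BT.601 luma), edge padding and normalization are choices made here, not necessarily what AnyNode produced:

```python
import numpy as np


def sobel_edges(image):
    """Return a 3-channel gradient-magnitude image normalized to 0..1.

    Assumed input layout: [batch, height, width, 3], floats in 0..1.
    """
    # Grayscale with BT.601 luma weights -> shape [batch, height, width]
    gray = image[..., 0] * 0.299 + image[..., 1] * 0.587 + image[..., 2] * 0.114
    h, w = gray.shape[1:]
    padded = np.pad(gray, ((0, 0), (1, 1), (1, 1)), mode="edge")

    def shifted(dy, dx):
        # The neighbor at offset (dy, dx) for every pixel, as one array
        return padded[:, 1 + dy:1 + dy + h, 1 + dx:1 + dx + w]

    # Sobel kernels: derivative along one axis, 1-2-1 smoothing along the other
    gx = (shifted(-1, 1) + 2 * shifted(0, 1) + shifted(1, 1)) - (
        shifted(-1, -1) + 2 * shifted(0, -1) + shifted(1, -1)
    )
    gy = (shifted(1, -1) + 2 * shifted(1, 0) + shifted(1, 1)) - (
        shifted(-1, -1) + 2 * shifted(-1, 0) + shifted(-1, 1)
    )
    magnitude = np.hypot(gx, gy)
    magnitude = magnitude / max(float(magnitude.max()), 1e-8)  # flat images stay all zeros
    return np.repeat(magnitude[..., None], 3, axis=-1).astype(image.dtype)
```

Using shifted views of one padded array avoids an explicit convolution loop and keeps the output the same size as the input.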