
ComfyDeploy: How does comfyui-ollama-nodes work in ComfyUI?

What is comfyui-ollama-nodes?

ComfyUI custom nodes for working with [Ollama](https://github.com/ollama/ollama). NOTE: Assumes that an Ollama server is running at http://127.0.0.1:11434 and is accessible by the ComfyUI backend.

How to install it in ComfyDeploy?

Head over to the machine page

- Click on the "Create a new machine" button

- Select the `Edit` build steps

- Add a new step -> Custom Node

- Search for `comfyui-ollama-nodes` and select it

- Close the build step dialog and then click on the "Save" button to rebuild the machine

comfyui-ollama-nodes

Add LLM workflows, including image recognition (vision), to ComfyUI via Ollama

Currently, it assumes that an Ollama server is running at http://127.0.0.1:11434 and accessible by the ComfyUI backend.
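Because the nodes depend on that server being up, it can help to confirm reachability before queuing a workflow. The following is a minimal sketch using only the Python standard library; the helper name `ollama_is_reachable` and the timeout value are illustrative assumptions and are not part of this node pack.

```python
import urllib.error
import urllib.request

# Default address stated in this README; adjust if your server runs elsewhere.
OLLAMA_URL = "http://127.0.0.1:11434"


def ollama_is_reachable(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at base_url with status 200.

    Hypothetical helper for illustration only.
    """
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, HTTP error, or malformed URL.
        return False
```

Calling `ollama_is_reachable()` from the same machine that runs the ComfyUI backend gives a quick yes/no answer; a `False` result usually means the server is not started or is bound to a different address.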

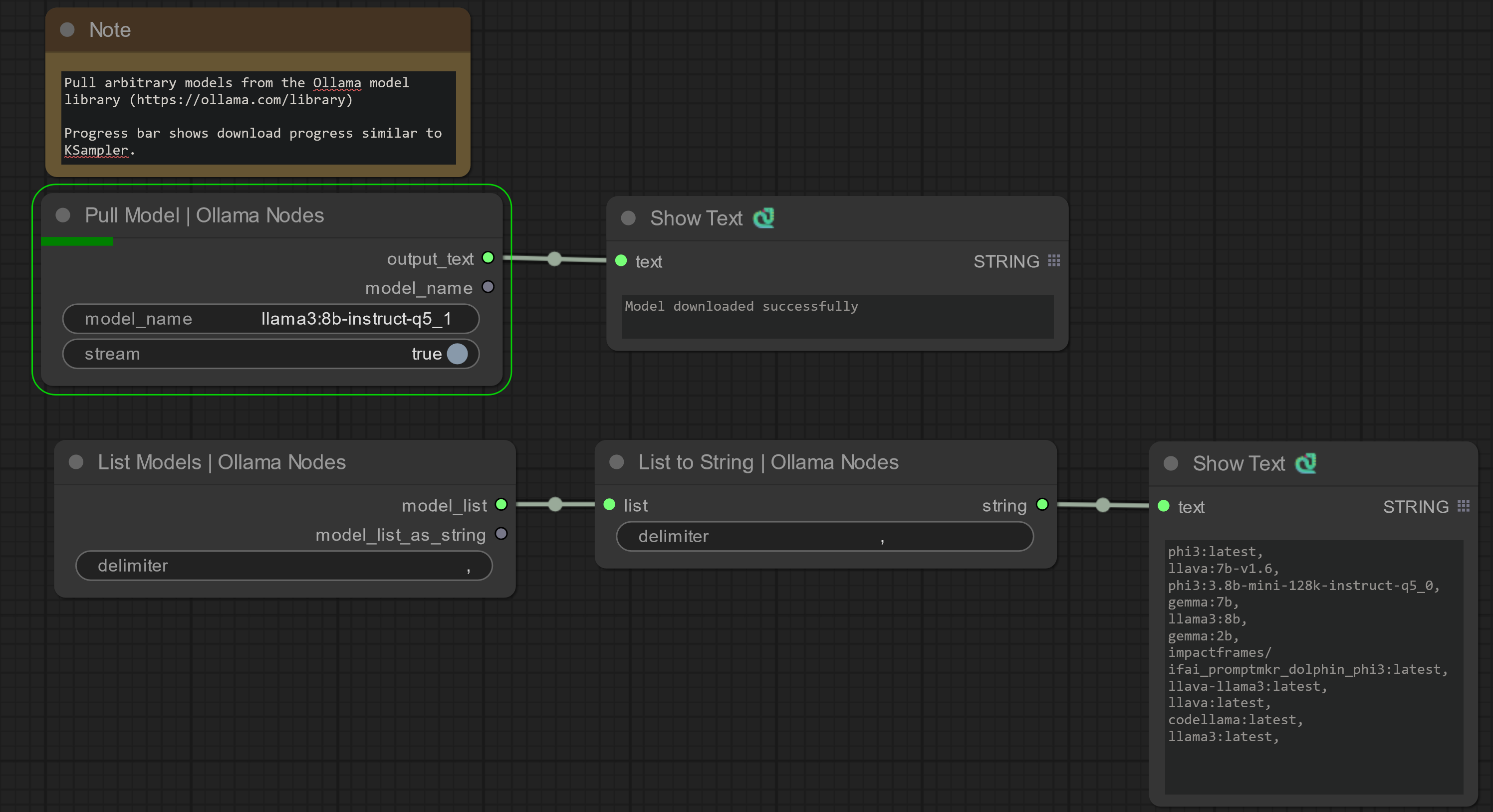

Screenshots

Pulling models from the Ollama Model Library with download progress bar:

Generating text descriptions of loaded images:
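For orientation, image description through Ollama comes down to a generate request that carries base64-encoded images. The sketch below builds such a request body for Ollama's `/api/generate` endpoint; it is not taken from this node pack's source, and the function name `build_vision_request`, the `unload_after` flag, and the model name used in the usage note are assumptions for illustration.

```python
import base64
import json
from typing import Sequence


def build_vision_request(
    model: str,
    prompt: str,
    images: Sequence[bytes],
    unload_after: bool = False,
) -> bytes:
    """Build a JSON body for POST /api/generate with one or more images.

    Hypothetical helper; `images` holds raw encoded image bytes (e.g. PNG).
    """
    payload = {
        "model": model,
        "prompt": prompt,
        # Ollama expects each image as a base64-encoded string.
        "images": [base64.b64encode(img).decode("ascii") for img in images],
        # Ask for a single JSON response instead of a token stream.
        "stream": False,
    }
    if unload_after:
        # keep_alive=0 asks Ollama to unload the model once the request finishes,
        # which frees GPU memory for image generation models.
        payload["keep_alive"] = 0
    return json.dumps(payload).encode("utf-8")
```

A body built with, say, `build_vision_request("llava", "Describe this image.", [png_bytes])` would be sent with `Content-Type: application/json` to `http://127.0.0.1:11434/api/generate`, where `llava` stands in for whichever vision model has been pulled.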

TODO:

- ✅ Implement model pulling node

- ✅ Implement UI progress bar updates when pulling with `stream=True`

- ✅ Implement Huggingface Hub model downloader node

- ⬜ Implement progress bar updates when downloading models

- ✅ ~~Implement model loading node~~ Models are loaded dynamically on generate; it's good to unload them from the GPU to leave room for image generation models in the same workflow.

- ✅ Implement generate node

- ⬜ Implement generate node with token streaming (I think it's just a UI limitation with the ShowText class)

- ✅ Implement generate node with vision model (can take image batch as input!)

- ⬜ Implement chat node (likely requires new frontend node development)

- ⬜ Implement model converter node (safetensors to GGUF)

- ⬜ Implement quantization node

- ⬜ Test compatibility with SaltAI LLM tools (LlamaIndex)
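As background for the progress bar items above: when a pull is streamed, Ollama's `/api/pull` endpoint emits one JSON object per line, and download events carry `total` and `completed` byte counts. The sketch below shows one way such a line could be turned into a fraction for a progress bar. It is an illustration under those assumptions, not the node pack's actual implementation, and `pull_progress` is a hypothetical name.

```python
import json
from typing import Optional, Tuple


def pull_progress(line: str) -> Tuple[str, Optional[float]]:
    """Parse one streamed pull event and return (status, fraction).

    The fraction is None for events without byte counts, such as
    "pulling manifest" or "success".
    """
    event = json.loads(line)
    status = event.get("status", "")
    total = event.get("total")
    completed = event.get("completed", 0)
    if not total:
        # No size information yet, so there is nothing to report.
        return status, None
    # Clamp to 1.0 in case the reported counts overshoot.
    return status, min(completed / total, 1.0)
```

Each fraction can then be forwarded to whatever progress widget the frontend exposes, while status-only events can be shown as text.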

Similar Nodes

The following node packs are similar, and effort will be made to integrate seamlessly with them:

- https://github.com/daniel-lewis-ab/ComfyUI-Llama

- https://github.com/get-salt-AI/SaltAI_Language_Toolkit

- https://github.com/alisson-anjos/ComfyUI-Ollama-Describer

Development

If you'd like to contribute, please open a Git Issue describing what you'd like to contribute. See ComfyOrg docs for instructions on getting started developing custom nodes.

Attributions

- logger.py taken from https://github.com/Kosinkadink/ComfyUI-VideoHelperSuite, GPL-3.0 license

- Ollama MIT license, based on llama.cpp MIT license

- huggingface_hub python library Apache-2.0 license