ComfyDeploy: How V-Express: Conditional Dropout for Progressive Training of Portrait Video Generation works in ComfyUI?

What is V-Express: Conditional Dropout for Progressive Training of Portrait Video Generation?

In the field of portrait video generation, the use of single images to generate portrait videos has become increasingly prevalent. A common approach involves leveraging generative models to enhance adapters for controlled generation. However, control signals can vary in strength, including text, audio, image reference, pose, depth map, etc. Among these, weaker conditions often struggle to be effective due to interference from stronger conditions, posing a challenge in balancing these conditions. In our work on portrait video generation, we identified audio signals as particularly weak, often overshadowed by stronger signals such as pose and original image. However, direct training with weak signals often leads to difficulties in convergence. To address this, we propose V-Express, a simple method that balances different control signals through a series of progressive drop operations. Our method gradually enables effective control by weak conditions, thereby achieving generation capabilities that simultaneously take into account pose, input image, and audio.

NOTE: You need to download [model_ckpts](https://huggingface.co/tk93/V-Express/tree/main) manually.

How to install it in ComfyDeploy?

Head over to the machine page

- Click on the "Create a new machine" button

- Select the "Edit" build steps

- Add a new step -> Custom Node

- Search for "V-Express: Conditional Dropout for Progressive Training of Portrait Video Generation" and select it

- Close the build step dialog and then click on the "Save" button to rebuild the machine

V-Express: Conditional Dropout for Progressive Training of Portrait Video Generation

<a href='https://tenvence.github.io/p/v-express/'><img src='https://img.shields.io/badge/Project-Page-green'></a> <a href='https://tenvence.github.io/p/v-express/'><img src='https://img.shields.io/badge/Technique-Report-red'></a> <a href='https://huggingface.co/tk93/V-Express'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Model-blue'></a>

Introduction

In the field of portrait video generation, the use of single images to generate portrait videos has become increasingly prevalent. A common approach involves leveraging generative models to enhance adapters for controlled generation. However, control signals can vary in strength, including text, audio, image reference, pose, depth map, etc. Among these, weaker conditions often struggle to be effective due to interference from stronger conditions, posing a challenge in balancing these conditions. In our work on portrait video generation, we identified audio signals as particularly weak, often overshadowed by stronger signals such as pose and original image. However, direct training with weak signals often leads to difficulties in convergence. To address this, we propose V-Express, a simple method that balances different control signals through a series of progressive drop operations. Our method gradually enables effective control by weak conditions, thereby achieving generation capabilities that simultaneously take into account pose, input image, and audio.
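The idea of dropping stronger conditions so that a weaker one can take effect can be illustrated with a toy example. This is only a sketch of the general idea and not the V-Express training procedure: the stage names, the drop probabilities, and the `drop_conditions` helper are all hypothetical, and "dropping" is assumed here to mean replacing a condition with zeros.

```python
import random

# Hypothetical schedule: probability of dropping each *strong* condition
# at each training stage. These names and numbers are illustrative
# assumptions, not values taken from V-Express.
DROP_SCHEDULE = {
    "stage_1": {"pose": 0.0, "reference": 0.0},
    "stage_2": {"pose": 0.3, "reference": 0.3},
    "stage_3": {"pose": 0.5, "reference": 0.5},
}


def drop_conditions(conditions, stage, rng):
    """Return a copy of `conditions` with strong signals randomly zeroed.

    `conditions` maps a condition name to a list of floats. Conditions
    that are not listed in the schedule (such as audio) are never dropped.
    """
    probs = DROP_SCHEDULE[stage]
    out = {}
    for name, values in conditions.items():
        p = probs.get(name, 0.0)
        if rng.random() < p:
            out[name] = [0.0] * len(values)  # condition dropped for this sample
        else:
            out[name] = list(values)  # condition kept unchanged
    return out


rng = random.Random(0)
sample = {"audio": [0.2, 0.4], "pose": [1.0, 1.0], "reference": [0.5, 0.5]}
print(drop_conditions(sample, "stage_3", rng))
```

In this toy version, later stages drop pose and reference more often, so the model would have to rely on audio for a larger share of samples.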

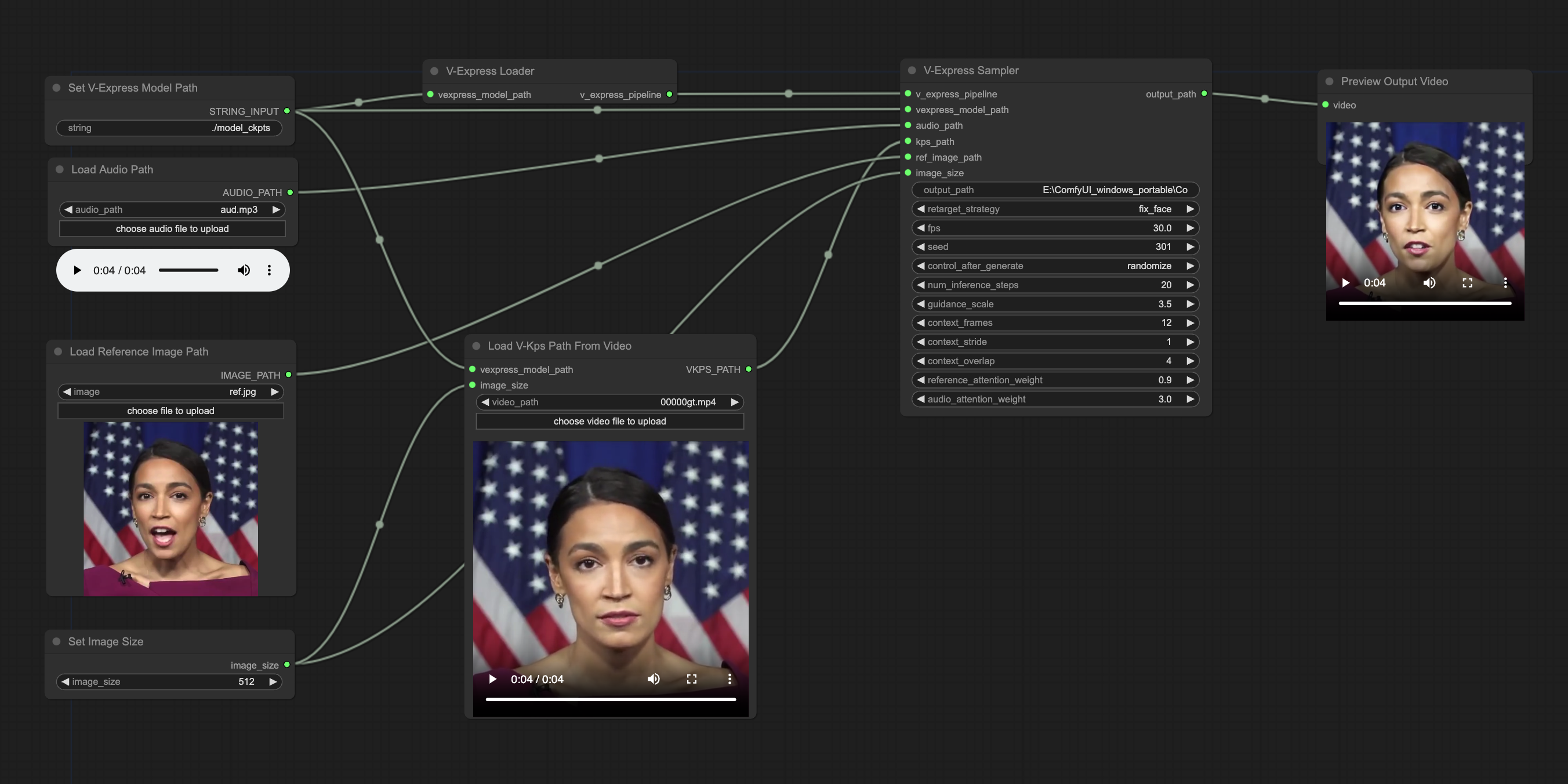

Workflow

Installation

- Clone this repo into `Your ComfyUI root directory\ComfyUI\custom_nodes\` and install the dependent Python packages as follows:

      cd Your_ComfyUI_root_directory\ComfyUI\custom_nodes\
      git clone https://github.com/tiankuan93/ComfyUI-V-Express
      pip install -r ComfyUI-V-Express\requirements.txt

  If you are using ComfyUI_windows_portable, use `.\python_embeded\python.exe -m pip` in place of `pip` for installation.

  If you get an error regarding insightface, you may find a solution here.

  - First, download the .whl file here.

  - Then, install it with:

        .\python_embeded\python.exe -m pip install --no-deps --target=\your_path_of\python_embeded\Lib\site-packages [path-to-wheel]

- Download the V-Express models and the other required models:

  - model_ckpts

  - Replace the model_ckpts folder with the downloaded V-Express/model_ckpts. Then download all `.bin` models and put them in the `model_ckpts/v-express` directory. These are `audio_projection.bin`, `denoising_unet.bin`, `motion_module.bin`, `reference_net.bin`, and `v_kps_guider.bin`. The final model_ckpts folder looks as follows:

        ./model_ckpts/
        |-- insightface_models
        |-- sd-vae-ft-mse
        |-- stable-diffusion-v1-5
        |-- v-express
        |-- wav2vec2-base-960h

- Put the files from the input directory into your ComfyUI input directory, `Your ComfyUI root directory\ComfyUI\input\`.

- Set `output_path` to `directory\ComfyUI\output\xxx.mp4`; otherwise the output video will not be displayed in ComfyUI.
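The folder layout and output location described above can be checked before running a workflow. The following is a minimal sketch and not part of ComfyUI-V-Express: the helper names `missing_model_files` and `output_video_path` are hypothetical, and the expected folder and file names are simply copied from the list above.

```python
from pathlib import Path

# Names copied from the model_ckpts layout described above.
EXPECTED_DIRS = [
    "insightface_models",
    "sd-vae-ft-mse",
    "stable-diffusion-v1-5",
    "v-express",
    "wav2vec2-base-960h",
]
EXPECTED_BINS = [
    "audio_projection.bin",
    "denoising_unet.bin",
    "motion_module.bin",
    "reference_net.bin",
    "v_kps_guider.bin",
]


def missing_model_files(model_ckpts):
    """Return the expected sub-folders and .bin files that are absent."""
    root = Path(model_ckpts)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [
        f"v-express/{name}"
        for name in EXPECTED_BINS
        if not (root / "v-express" / name).is_file()
    ]
    return missing


def output_video_path(comfyui_dir, filename):
    """Build an .mp4 path inside <comfyui_dir>/output (hypothetical helper)."""
    if not filename.lower().endswith(".mp4"):
        raise ValueError("output_path should end with .mp4")
    return Path(comfyui_dir) / "output" / filename
```

For example, `missing_model_files("model_ckpts")` returns an empty list once every folder and `.bin` file is in place, and `str(output_video_path(...))` gives a value that can be used for `output_path`.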

Acknowledgements

We would like to thank the contributors to AIFSH/ComfyUI_V-Express for their open research and exploration.