
ComfyDeploy: How do the Custom nodes for llm chat with optional image input work in ComfyUI?

What is "Custom nodes for llm chat with optional image input"?

A custom node for ComfyUI that enables Large Language Model (LLM) chat interactions with optional image input support.

How do I install it in ComfyDeploy?

Head over to the machine page:

- Click the "Create a new machine" button

- Select "Edit build steps"

- Add a new step -> Custom Node

- Search for "Custom nodes for llm chat with optional image input" and select it

- Close the build step dialog, then click the "Save" button to rebuild the machine

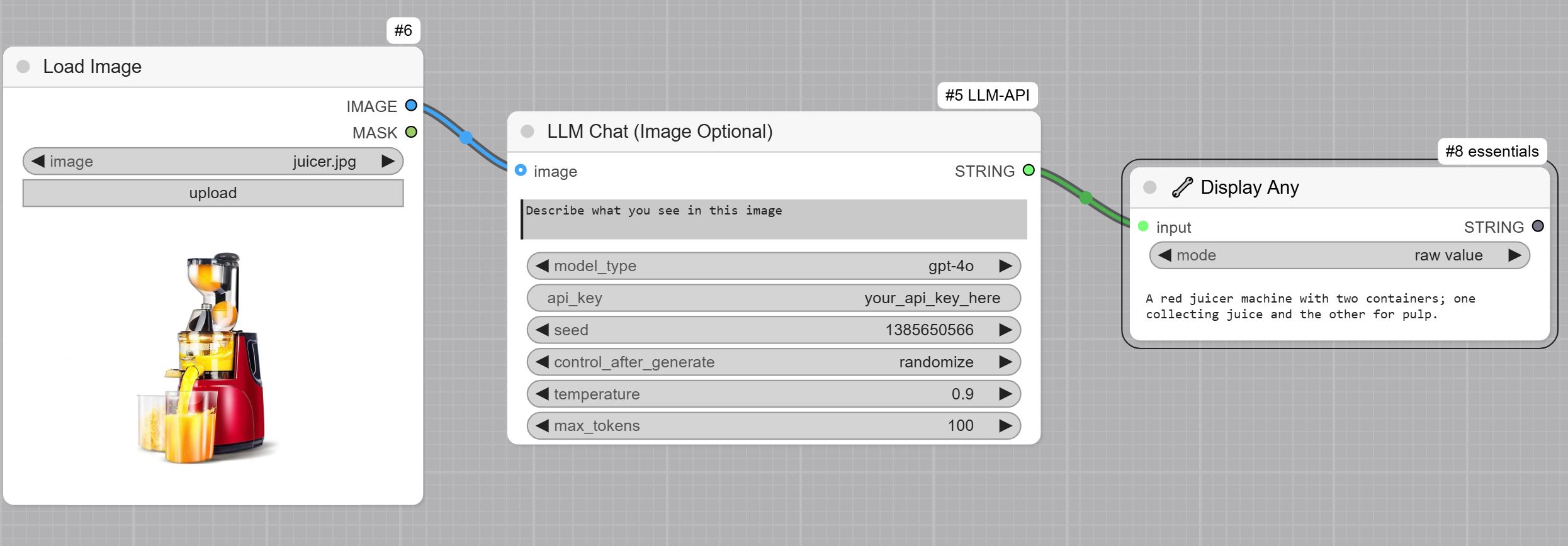

ComfyUI LLM Chat Node

A custom node for ComfyUI that enables Large Language Model (LLM) chat interactions with optional image input support. This node allows you to interact with various text and vision language models directly within your ComfyUI workflows.

<img src="assets/node.jpg" width="400"/>
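ComfyUI custom nodes are Python classes that declare their inputs through an `INPUT_TYPES` classmethod; inputs listed under an "optional" key may be left unconnected in the graph, which is the usual way to make an image input optional. The minimal sketch below assumes that convention. The class name `LLMChatNode`, its input names, and the `call_llm` stub are hypothetical placeholders, not this repository's actual code, and the stub returns a tagged echo instead of contacting a model.

```python
# Hypothetical sketch of a ComfyUI custom node with an optional IMAGE input.
# LLMChatNode, its input names, and call_llm are illustrative assumptions,
# not code from the ComfyUI-OpenAI repository.


def call_llm(prompt, image=None, temperature=0.7, max_tokens=512, seed=0):
    """Stand-in for the real model call (hypothetical); echoes the prompt."""
    mode = "vision" if image is not None else "text"
    return f"[{mode}] {prompt}"


class LLMChatNode:
    @classmethod
    def INPUT_TYPES(cls):
        return {
            "required": {
                "prompt": ("STRING", {"multiline": True, "default": ""}),
                "temperature": ("FLOAT", {"default": 0.7, "min": 0.0, "max": 2.0, "step": 0.1}),
                "max_tokens": ("INT", {"default": 512, "min": 1, "max": 8192}),
                "seed": ("INT", {"default": 0, "min": 0, "max": 0xFFFFFFFFFFFFFFFF}),
            },
            # Inputs under "optional" may stay unconnected; ComfyUI then omits
            # the argument, so the method's default (None) is used.
            "optional": {
                "image": ("IMAGE",),
            },
        }

    RETURN_TYPES = ("STRING",)
    RETURN_NAMES = ("response",)
    FUNCTION = "chat"  # name of the method ComfyUI calls
    CATEGORY = "LLM"

    def chat(self, prompt, temperature, max_tokens, seed, image=None):
        text = call_llm(prompt, image=image, temperature=temperature,
                        max_tokens=max_tokens, seed=seed)
        # Outputs are returned as a tuple matching RETURN_TYPES.
        return (text,)


# ComfyUI discovers nodes in custom_nodes/ through these module-level mappings.
NODE_CLASS_MAPPINGS = {"LLMChatNode": LLMChatNode}
NODE_DISPLAY_NAME_MAPPINGS = {"LLMChatNode": "LLM Chat (sketch)"}
```

Calling `LLMChatNode().chat("Hello", 0.7, 512, 0)` without an image takes the text-only path; passing `image=` switches the stub to its vision branch. A real node would replace `call_llm` with whatever client the package actually uses.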

Features

- Support for both text-only and vision-enabled language models

- Configurable model parameters (temperature, max tokens)

- Optional image input support

- Adjustable random seed for consistent outputs

- Easy integration with existing ComfyUI workflows
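Temperature, max tokens, seed, and the optional image typically end up as fields of a single API request. The repository name hints at an OpenAI-compatible backend, but that is an assumption; this sketch builds a request body in the OpenAI Chat Completions shape, where a text-only request sends the prompt as a plain string and a vision request sends the prompt and a base64 data URL as separate content parts. The function name `build_chat_request`, the model name `example-model`, and the placeholder PNG bytes are hypothetical.

```python
import base64
import json


def build_chat_request(model, prompt, image_png=None, temperature=0.7,
                       max_tokens=512, seed=0):
    """Build an OpenAI-style Chat Completions request body (assumed API shape)."""
    if image_png is None:
        # Text-only models accept a plain string as message content.
        content = prompt
    else:
        # Vision models take a list of parts; the image travels as a base64 data URL.
        data_url = "data:image/png;base64," + base64.b64encode(image_png).decode("ascii")
        content = [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url}},
        ]
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "temperature": temperature,  # higher values give more varied output
        "max_tokens": max_tokens,    # upper bound on the length of the reply
        # A fixed seed asks supporting backends for repeatable sampling (best effort).
        "seed": seed,
    }


# Hypothetical model name and placeholder bytes standing in for a real PNG file.
text_req = build_chat_request("example-model", "Write a haiku about nodes.")
vision_req = build_chat_request("example-model", "Describe this image.",
                                image_png=b"\x89PNG placeholder")
print(json.dumps(text_req, indent=2))
```

Keeping the text-only branch as a plain string lets the same node work with models that do not accept image parts, which matches the "text-only and vision-enabled" split listed above.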

Installation and Usage

- Navigate to the ComfyUI/custom_nodes folder in a terminal or command prompt.

- Clone the repo using the following command: `git clone https://github.com/ComfyUI-Workflow/ComfyUI-OpenAI`

- Go to custom_nodes/COMFYUI-LLM-API and install the dependencies by running `pip install -r requirements.txt`

- Restart ComfyUI

Example use cases: